
Why A/B Testing Is Important: Top 10 Benefits with Examples

Learn why A/B testing is important for e-commerce brands. Find out how it lifts conversion rates, reduces risk, and helps you optimize faster with examples.

Gaurav Rawat

Jan 7, 2026

You spend thousands on paid traffic every month. Shoppers click your ads, land on your site, and then leave without buying. You tweak headlines, adjust button colors, and rearrange product grids based on gut feeling. But you're not sure what actually works.

That's where A/B testing comes in, replacing guesswork with proof. It shows you what drives conversions, what stops them, and how to get more from every visitor without spending another dollar on ads. Some industry data suggests that testing even basic landing page changes can improve conversion by about 30% on average, though results vary widely by brand and traffic.

In this guide, we break down why A/B testing is important, what results you can expect, and how to make testing work without all the complexity. You'll also see how modern platforms make testing faster and smarter by adapting experiences in real time. So, let’s get started.

Key Takeaways

Replace assumptions with data: A/B testing shows you what actually drives conversions instead of relying on opinions or trends.

Increase revenue from existing traffic: Small wins from testing add up to significant lifts in conversion rate, average order value (AOV), and repeat purchases.

Reduce risk before scaling: Test changes on a small audience before rolling them out site-wide to avoid costly mistakes.

Optimize every part of the funnel: From landing pages and PDPs to cart flows and checkout, everything can be tested and improved.

Speed up iteration cycles: Modern tools let marketers launch and test without dev dependencies or long deployment cycles.

Continuous improvement beats one-time fixes: Regular testing creates a culture of optimization that compounds over time.

Better user experience drives loyalty: Testing helps you build experiences shoppers actually want, leading to higher engagement and retention.

Platforms like Nudge make testing smarter: AI-powered personalization adapts experiences in real time while continuously testing what works best.

What is A/B Testing?

A/B testing compares two versions of a page, element, or experience to see which one performs better. You show version A to half your visitors and version B to the other half. Then you measure which version drives more conversions, clicks, or purchases.

For example, you might test two different headlines on a PDP (Product Detail Page). Version A says "Free shipping on orders over $50," while version B says "Get it delivered free." You run both versions for a week, and version B drives 12% more add-to-carts. Now you know which message works better, and you can roll it out to everyone.
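One common way to get the 50/50 split described above is to hash a visitor identifier, so the same shopper always sees the same version on every visit. The sketch below is illustrative only: the function name `assign_variant`, the visitor ID format, and the experiment name are hypothetical, and most testing tools handle this step for you.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "pdp-headline") -> str:
    """Return "A" or "B" for a visitor, stable across visits."""
    # Hash the experiment name together with the visitor ID so the same
    # visitor can land in different groups for different experiments.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % 100  # integer from 0 to 99
    return "A" if bucket < 50 else "B"


# Hypothetical visitor IDs, used only to show that the split is close to even.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}")] += 1
print(counts)
```

Because the assignment depends only on the ID and the experiment name, a returning visitor never flips between versions mid-test, which keeps the comparison clean.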

But the real question is not whether to test. It's why A/B testing matters so much for growth.

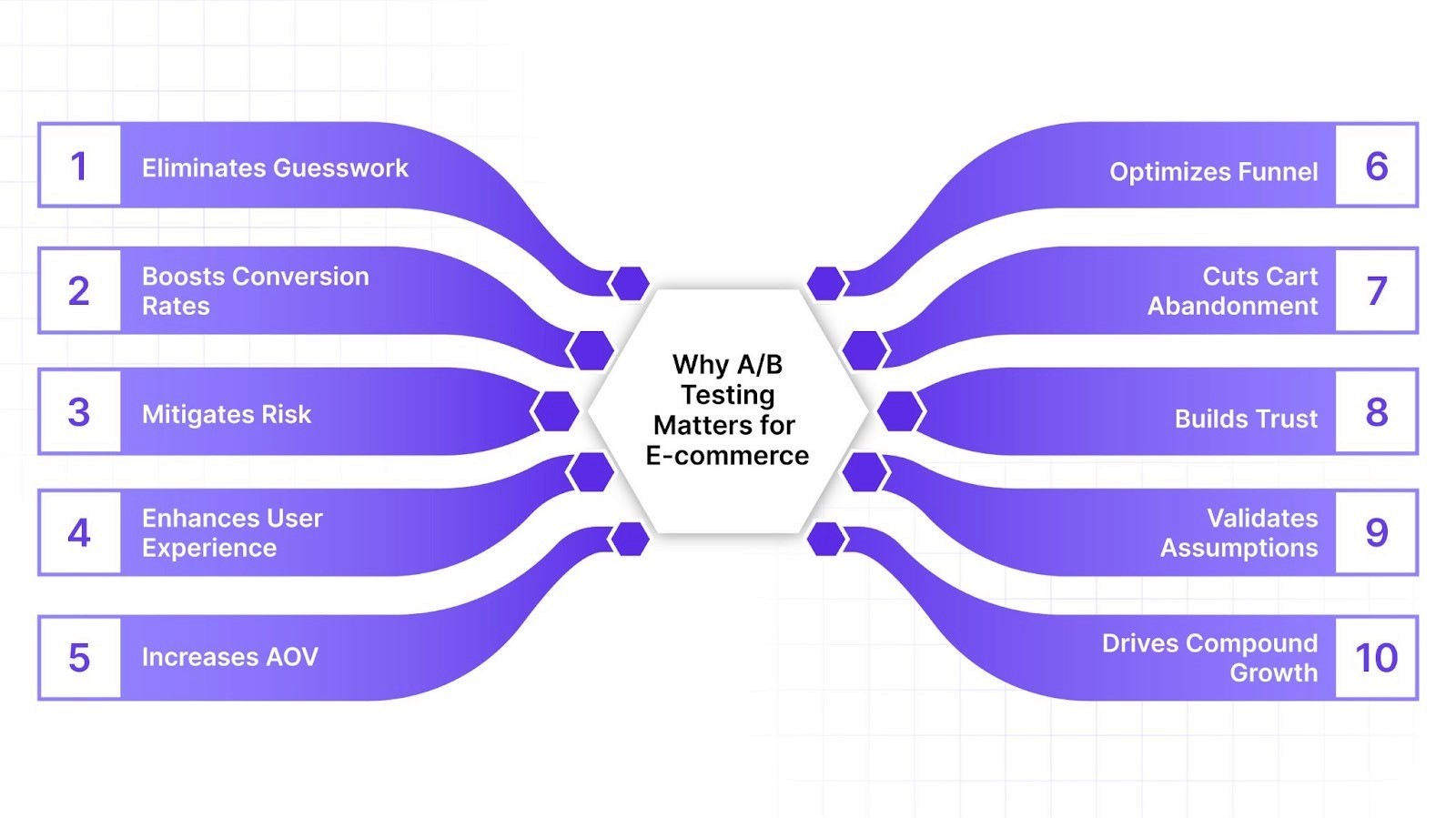

Why Is A/B Testing Important for E-commerce Brands?

A/B testing gives you a clear, data-backed way to improve results without increasing ad spend or traffic. For high-growth DTC brands managing tight margins and expensive customer acquisition, testing is how you get more value from every click.

Here's what makes A/B testing critical for e-commerce growth:

1. Replaces Guesswork with Proof

You stop debating which headline or layout is better and start seeing what actually works with your audience. Testing removes assumptions and shows you what shoppers respond to based on their actual behavior.

Example: Grene, an agriculture e-commerce brand, revamped its mini cart design through testing instead of relying on assumptions about what looked better. The tested redesign raised their e-commerce conversion rate from 1.83% to 1.96% and doubled the total purchase quantity.

Tip: Start by testing elements where your team has the strongest disagreements. These are usually the areas where data will have the biggest impact on decision-making.

2. Increases Conversion Rates from Existing Traffic

Small lifts in conversion can mean thousands in additional revenue. You get more from the traffic you already have without spending another dollar on ads.

Example: Clear Within, a skincare brand, tested moving their "Add to Cart" button from below the fold to above the fold on product pages. This simple placement change delivered an 80% increase in add-to-cart rates in just three days.

Tip: Focus testing efforts on high-traffic pages first. A 2% lift on a page with 50,000 monthly visitors creates more impact than a 10% lift on a page with 1,000 visitors.
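The arithmetic behind this tip can be sketched in a few lines. The example assumes both pages convert at the same 2% baseline rate and treats the lifts as relative improvements; the baseline figure and the function name `extra_conversions` are assumptions for illustration, not numbers from any brand above.

```python
def extra_conversions(monthly_visitors: int, baseline_rate: float, relative_lift: float) -> float:
    """Additional conversions per month from a relative lift in conversion rate."""
    return monthly_visitors * baseline_rate * relative_lift


BASELINE_RATE = 0.02  # assumed 2% conversion rate on both pages

high_traffic_gain = extra_conversions(50_000, BASELINE_RATE, 0.02)  # 2% lift
low_traffic_gain = extra_conversions(1_000, BASELINE_RATE, 0.10)    # 10% lift

print(f"High-traffic page: about {high_traffic_gain:.0f} extra orders per month")
print(f"Low-traffic page: about {low_traffic_gain:.0f} extra orders per month")
```

Under these assumptions, the smaller lift on the busy page is worth about 20 extra orders a month, against about 2 for the bigger lift on the quiet page.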

3. Reduces Risk Before Making Big Changes

Testing lets you validate changes on a small segment before rolling them out site-wide. If a new checkout flow performs poorly in testing, you avoid the damage of launching it to everyone.

Example: Wallstickerland, a Danish e-commerce store, tested placing their newsletter signup pop-up on the left side versus the right side of the screen. They found that positioning it on the right side generated 17% more signups than the left placement, allowing them to validate the change before implementing it across all pages.

Tip: Always test major changes that affect checkout, navigation, or core user flows. The cost of getting these wrong is too high to rely on assumptions.

4. Improves User Experience Based on Real Behavior

Testing shows you what shoppers actually prefer, not what you think they want. Better experiences lead to higher engagement, fewer bounces, and more repeat purchases.

Example: SmartWool tested two product page layouts with 25,000 visitors. They switched from a mixed-size image layout to a uniform grid layout where everything appeared organized and consistent. The cleaner grid design led to a 17.1% increase in average revenue per visitor.

Tip: Use heatmaps and session recordings alongside A/B tests to see why certain variations win. This helps you apply learnings to other pages.

5. Drives Higher AOV with Smarter Offers and Bundles

Testing different bundle placements, discount thresholds, or upsell messages helps you find what increases order value without feeling pushy.

Example: SiO Beauty tested adding a button to their side cart that allowed customers to upgrade single products into recurring subscriptions. This simple addition led to a 16.5% increase in subscription revenue by making the upgrade option visible at the right moment.

Tip: Test bundle placement across PDPs, cart pages, and checkout. The same offer can perform differently depending on where shoppers see it in their journey.

6. Optimizes Every Stage of the Funnel

From landing pages and PDPs to cart flows and checkout, you can test and improve every touchpoint in the shopper journey. Each small improvement compounds into bigger results.

Example: Arono, a weight loss app company, tested different background images in their newsletter signup pop-ups. They discovered that one background converted 53% better than another, demonstrating how small visual changes at any funnel stage can significantly impact signup rates and overall performance.

Tip: Map out your full funnel and identify the biggest drop-off points. Start testing at the stage where you're losing the most potential customers.

7. Reduces Cart Abandonment

Testing exit-intent offers, checkout flow changes, and urgency messaging can recover significant revenue from shoppers who were about to leave.

Example: Sport Chek redesigned their free shipping message from a simple statement to "Congratulations, You Qualify for Free Shipping!" with red text in a red-outlined box. The eye-catching formatting and celebratory language increased purchases by 7.3%.

Tip: Test different incentives and messages in exit-intent popups. Free shipping thresholds, limited-time discounts, and social proof can all work differently depending on your audience.

8. Builds Trust with Social Proof and Security Elements

Testing where and how you display trust signals helps you figure out what makes shoppers feel confident enough to buy.

Example: True Botanicals added social proof elements to their product detail pages through A/B testing. The winning variation achieved a $2M+ estimated ROI increase and a 4.9% site-wide conversion rate by placing reviews and ratings where shoppers needed them most.

Tip: Test different types of trust signals, including customer reviews, security badges, return policies, and testimonials. Placement matters as much as the element itself.

9. Validates Assumptions Before Full Rollout

Testing challenges conventional wisdom and shows you what actually works for your specific audience, not what works in general.

Example: Dewalt tested the CTA button text on their hand drill product page. They found that "Buy Now" received 17% more clicks than "Shop Now." This suggests that simple, direct language can outperform more casual alternatives, even when conventional wisdom says otherwise.

Tip: Test the "best practices" you read about. What works for other brands might not work for your audience, and testing is the only way to know for sure.

10. Creates Compound Growth Over Time

Regular testing doesn't just deliver one-time wins. Small improvements stack on top of each other and create significant growth over months and years.

Example: Get Inspired, a sports and fashion webshop, ran consistent email collection campaigns with weekly giveaways. Their popups have been viewed over 3.9 million times and generated more than 150,000 leads, achieving a 3.8% conversion rate through continuous testing and optimization.

Tip: Create a testing calendar and commit to running at least one test per week. Consistency matters more than the size of individual tests.
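To see how small wins stack, here is a rough sketch of the compounding math. It assumes every winning test adds the same relative lift and that each lift fully persists, which is optimistic in practice; the 2% baseline, 3% lift per win, and 12 wins are made-up inputs.

```python
def compounded_rate(baseline_rate: float, lift_per_win: float, wins: int) -> float:
    """Conversion rate after a series of winning tests, each adding a relative lift."""
    return baseline_rate * (1 + lift_per_win) ** wins


baseline = 0.02  # assumed 2% starting conversion rate
after_year = compounded_rate(baseline, lift_per_win=0.03, wins=12)
total_lift = after_year / baseline - 1

print(f"Conversion rate after 12 wins: {after_year:.2%}")
print(f"Total relative lift: {total_lift:.1%}")
```

Twelve wins of 3% each compound to a lift of roughly 43%, more than the 36% you would get by simply adding them up.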

These benefits only show up when you select the right A/B testing approach for each situation.

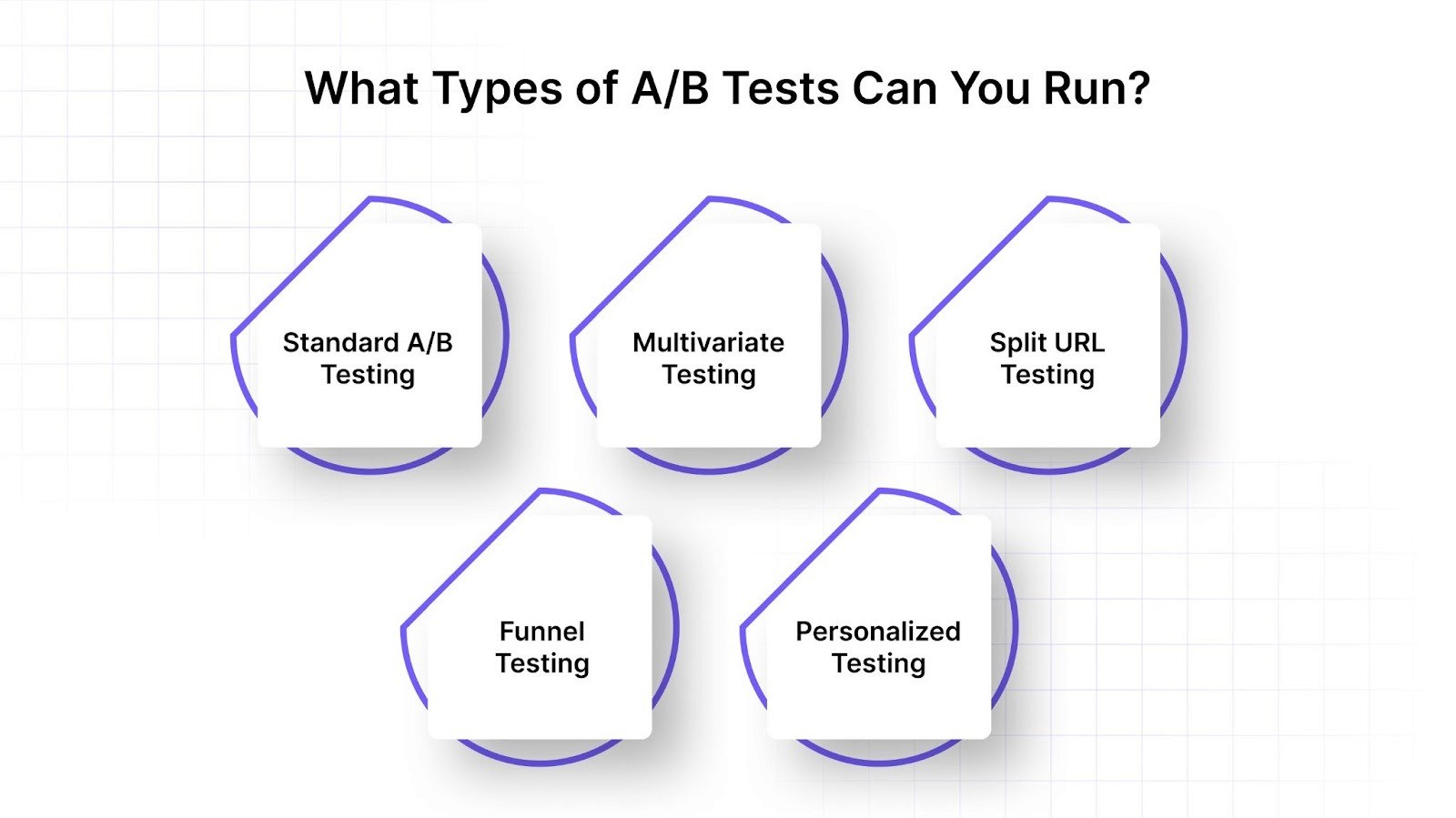

What Types of A/B Tests Can You Run?

Not every test needs the same setup. A simple headline change requires different testing than a complete page redesign. The test type you choose depends on what you're changing, how much traffic you have, and how quickly you need results.

| Test Type | Best For | Traffic Needed | Complexity |
| --- | --- | --- | --- |
| Standard A/B Testing | Single element changes (headlines, CTAs, images) | Low to Medium | Simple |
| Multivariate Testing | Multiple elements at once (combinations) | High | Advanced |
| Split URL Testing | Major page redesigns or layout overhauls | Medium to High | Moderate |
| Funnel Testing | Multi-page journey optimization | High | Advanced |
| Personalized Testing | Segment-specific experiences | Medium to High | Moderate |

Here's when to use each type of test:

Standard A/B testing: You compare two versions of a single element, like a headline, CTA button, or product image. This is the simplest and most common type of test. For example, you might test whether a "Shop Now" button converts better than "Add to Cart" on a product listing page (PLP).

Multivariate testing: You test multiple elements at once to see which combination performs best. For instance, you might test three different headlines and two different hero images simultaneously. This type of testing requires more traffic to reach statistical significance, but helps you find winning combinations faster.

Split URL testing: You test two completely different page designs by sending traffic to separate URLs. This works well when you want to test major layout changes or entirely new page structures. For example, you might test a minimalist PDP layout against a content-heavy one with reviews and specs above the fold.

Funnel testing: You test variations across multiple pages in a sequence, like testing different landing pages and PDP combinations to see which path drives more purchases. This helps you optimize the entire journey instead of just one page.

Personalized testing: You test different experiences for different audience segments based on behavior, source, location, or device. For instance, you might show first-time visitors a discount offer while showing returning visitors product recommendations based on past purchases.
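The traffic cost of multivariate testing is easy to see once you count the combinations. The sketch below uses the three-headline, two-image example from above; the 30,000 monthly visitors figure and the variable names are assumptions for illustration.

```python
from itertools import product

headlines = ["Headline 1", "Headline 2", "Headline 3"]
hero_images = ["Image 1", "Image 2"]

# Every headline is paired with every hero image.
combinations = list(product(headlines, hero_images))

monthly_visitors = 30_000  # assumed traffic to the tested page
per_combination = monthly_visitors // len(combinations)
per_version_ab = monthly_visitors // 2  # a standard A/B test has two versions

print(f"Combinations to test: {len(combinations)}")
print(f"Visitors per combination: {per_combination}")
print(f"Visitors per version in a standard A/B test: {per_version_ab}")
```

With the same traffic, each of the six combinations gets a third of the visitors that each version of a standard A/B test would, which is why multivariate tests take longer to reach significance.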

Choosing the right A/B test type is important. But running it correctly matters even more.

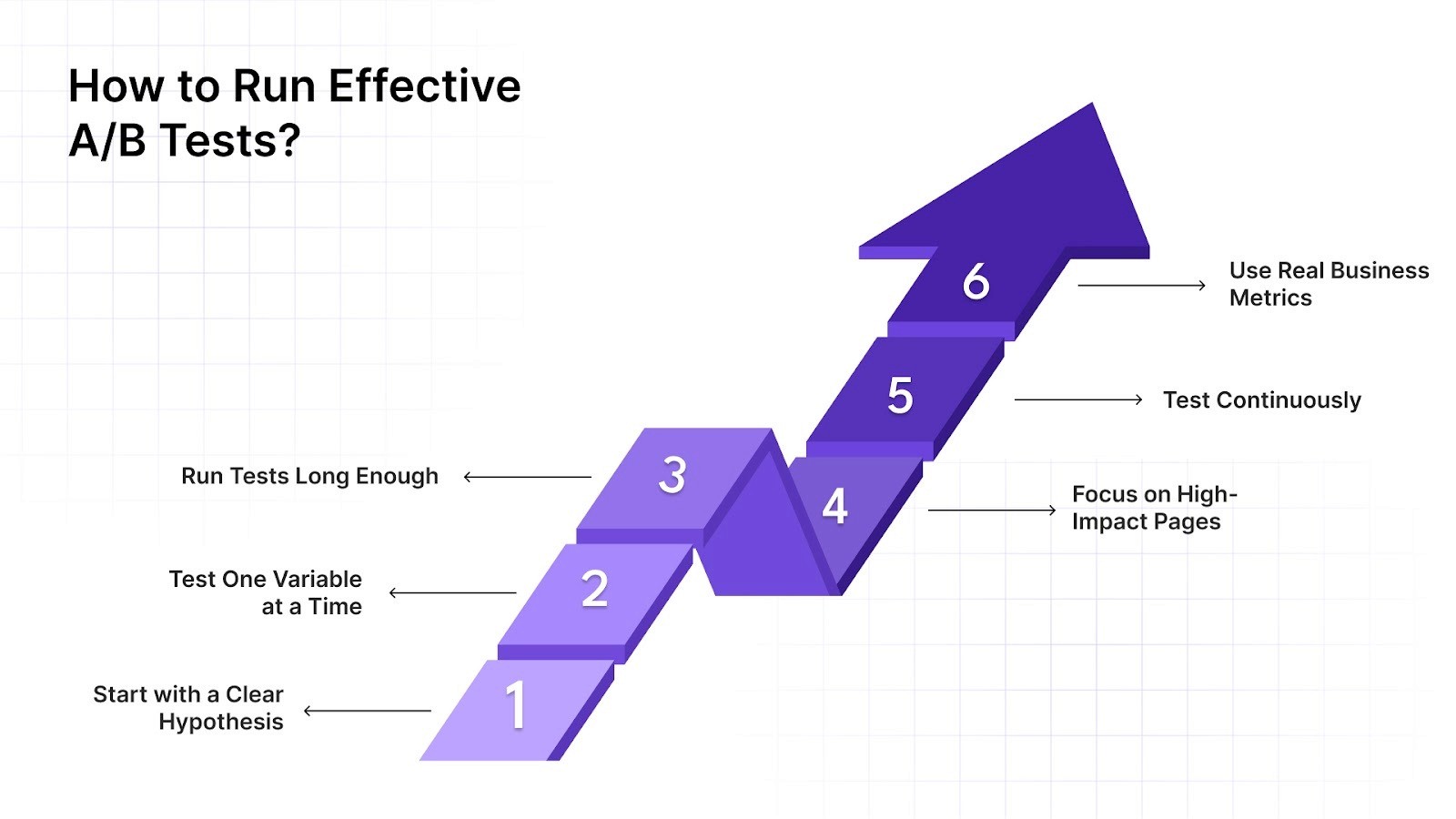

How to Run Effective A/B Tests

Running tests that actually drive results takes planning and discipline. Follow these best practices:

Start with a clear hypothesis: Don't test randomly. Start with a specific idea about what change will improve which metric and why. For example, "Adding urgency messaging to PDPs will increase add-to-cart rates because shoppers will feel more pressure to act fast."

Test one variable at a time: If you change the headline, button color, and image all at once, you won't know which change drove the result. Isolate variables so you can learn what actually works.

Run tests long enough to reach significance: Don't call a winner after one day. Run tests for at least one full week to account for day-of-week variations. Make sure you hit statistical significance before declaring a winner.

Focus on high-impact pages first: Start with pages that get the most traffic and have the biggest impact on revenue, like landing pages, PDPs, and cart pages. Small improvements here create big results.

Test continuously, not just once: A/B testing is not a one-time project. Build a testing calendar and launch new tests on a regular schedule to keep improving results over time.

Use real business metrics, not just clicks: Don't optimize for clicks if you care about revenue. Track the metrics that actually matter, like conversion rate, AOV, revenue per visitor, and purchase rate.
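For readers who want to check significance by hand, one standard approach is a two-proportion z-test. The sketch below is a minimal version using only the Python standard library; the visitor and conversion counts are invented, and the 0.05 cutoff is a common convention rather than a rule from this guide. Most testing platforms run an equivalent calculation for you.

```python
from math import erfc, sqrt


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = erfc(abs(z) / sqrt(2))  # two-sided p-value from the normal distribution
    return z, p_value


# Invented counts: version A converts 200 of 10,000 visitors, version B 250 of 10,000.
z, p_value = two_proportion_z_test(200, 10_000, 250, 10_000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
print("Significant at 0.05" if p_value < 0.05 else "Not significant yet, keep the test running")
```

A low p-value alone is not enough. Pair it with the one-week minimum above, since checking the numbers repeatedly and stopping at the first significant reading inflates false positives.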

Knowing the common mistakes helps you avoid them and get better results.

Common Mistakes to Avoid While Running A/B Tests

Most testing mistakes happen in the setup or interpretation phase, not in the idea itself. You might run a test for the right reason but end the test too early, change too many variables at once, or miss important patterns in your data.

Here are some of the common mistakes to avoid:

Calling winners too early: Ending a test after a few hours or days gives you unreliable results. You need enough data to confirm the result is real, not just a fluke. For instance, you might see version B showing a 15% lift after two days and want to declare it the winner. But if you run it for another week, the lift might disappear, and both versions could perform equally. Stopping too early leads to wrong decisions.

Testing too many things at once: If you change five elements simultaneously, you won't know which one drove the lift. Keep tests focused. For example, if you test a new headline, different product images, and a redesigned CTA button at the same time, you might see a 20% increase in conversions. But you won't know if it was the headline, the images, or the button that made the difference.

Ignoring mobile vs desktop differences: A headline that works on desktop might not work on mobile, where space is limited. Segment results by device to get accurate insights. For instance, a long descriptive headline might perform well on desktop but push the hero image too far down the screen on mobile, causing drops in conversion.

Not considering seasonality: Testing a discount offer during a holiday sale might show strong results that don't hold up during regular weeks. Account for seasonal effects. For example, a "Limited Time Offer" banner tested in December might show huge lifts. But when you roll it out in February, it could have almost no impact because shoppers aren't in the same buying mindset.

Stopping at the first win: Finding one winning variation is great, but don't stop there. Test the winner against a new challenger to keep improving. For instance, after finding that "Free Shipping Over $50" performs better than "Get Free Delivery," test it against "Unlock Free Shipping at $50" to see if you can push performance even higher.

Testing without enough traffic: If your page only gets 100 visitors a week, it'll take months to get reliable results. Focus tests on high-traffic pages first. For example, a test on your homepage with 50,000 monthly visits can give you answers in a matter of weeks, while the same test on a low-traffic category page with 500 monthly visits would need roughly 100 times as long to collect the same sample.
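You can estimate how long a test will take before you launch it. The sketch below uses a standard sample-size approximation for comparing two conversion rates; the 3% baseline, the 20% relative lift you hope to detect, and the 5% significance and 80% power settings are all assumptions, so treat the output as a rough planning figure rather than a guarantee.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed per version to detect the given relative lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def weeks_to_finish(monthly_visitors: int, n_per_variant: int, variants: int = 2) -> int:
    """Whole weeks needed to send n_per_variant visitors to every version."""
    weekly_visitors = monthly_visitors * 12 / 52
    return ceil(n_per_variant * variants / weekly_visitors)


needed = sample_size_per_variant(baseline_rate=0.03, relative_lift=0.20)
print(f"Visitors needed per version: {needed}")
print(f"Weeks at 50,000 monthly visits: {weeks_to_finish(50_000, needed)}")
print(f"Weeks at 500 monthly visits: {weeks_to_finish(500, needed)}")
```

With these assumed inputs, each version needs roughly 14,000 visitors, so the high-traffic page finishes in a few weeks while the low-traffic page would need years. Smaller expected lifts push the required sample up sharply.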

These mistakes are common because traditional testing makes them easy to make. The manual setup, long wait times, and dev dependencies create friction at every step. Modern platforms remove that friction by automating the hard parts and speeding up everything else.

Increase Conversions and Test Smarter with Nudge

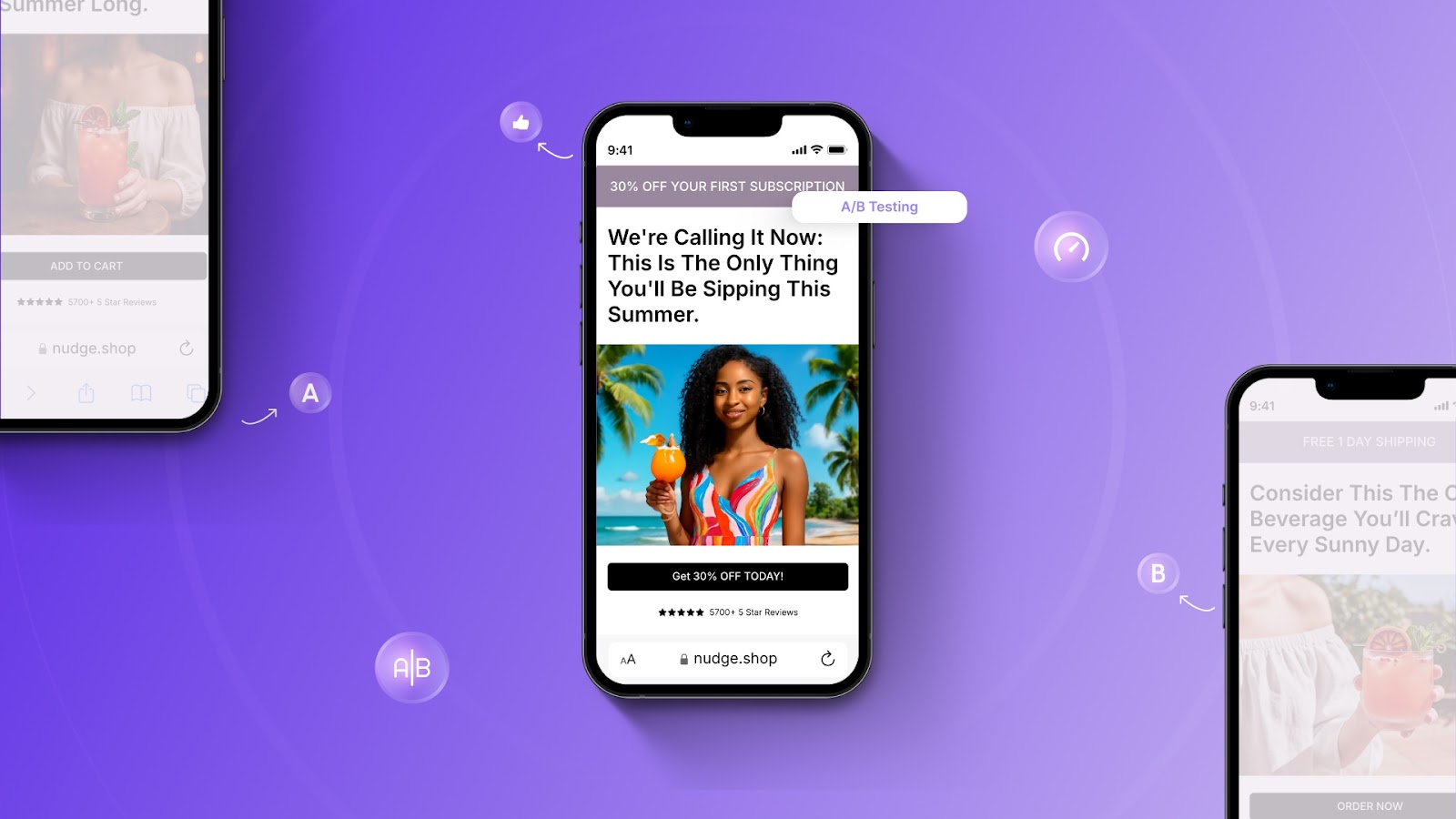

Nudge makes testing faster and smarter by personalizing experiences in real time while continuously experimenting to find what works best. Marketers launch and iterate without code, and AI adapts every surface based on shopper behavior, campaign source, and context.

Here's how Nudge helps you test and increase conversions faster:

Commerce Surfaces: Personalize landing pages, PDPs, and PLPs by campaign, source, and behavior. Test different layouts, offers, and product grids without waiting on dev cycles.

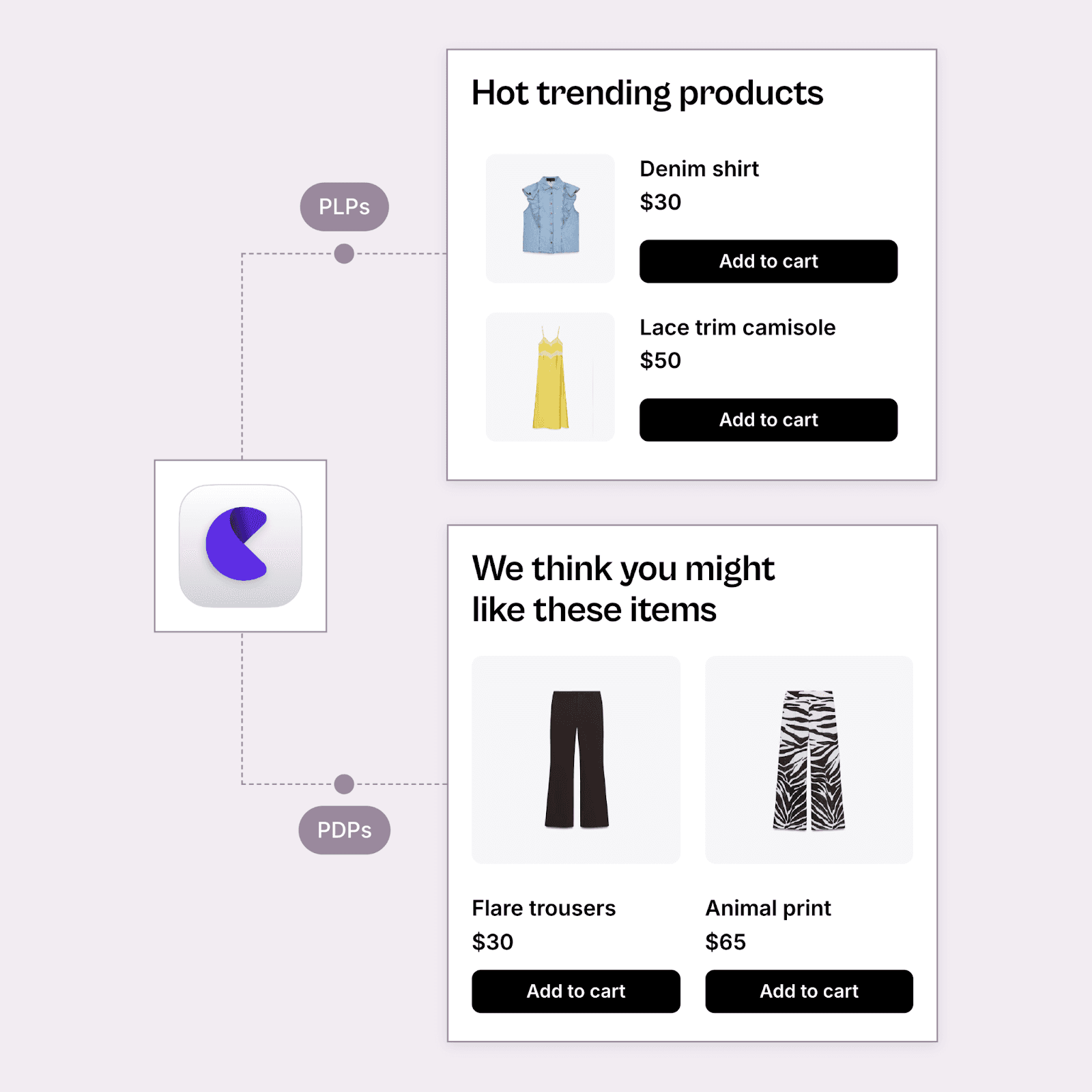

AI Product Recommendations: Show context-aware bundles and recommendations across PDPs, carts, and checkout. Test different placement strategies and let AI find what drives the highest AOV.

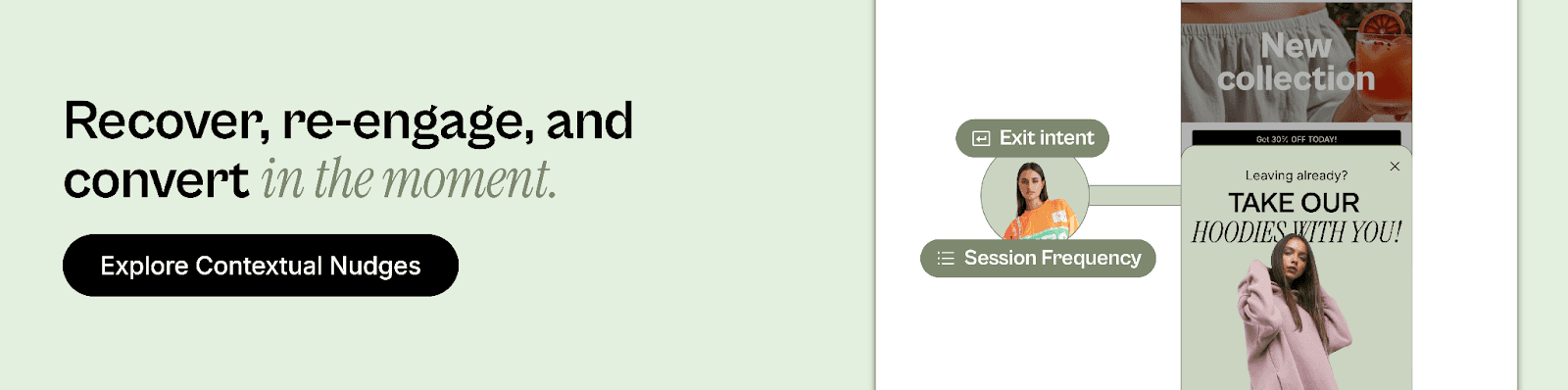

Contextual Nudges: Trigger exit-intent offers, urgency messages, and opt-in prompts based on scroll depth, time on page, and referral source. Test different formats and timing to maximize conversions.

Cart Abandonment: Launch exit-intent nudges and personalized offers to recover carts before shoppers leave. Test different incentives and messages to win back high-value sessions.

Funnel Personalization: Adapt every page from landing to checkout based on campaign creative, audience, and channel. Test full-funnel variations to see what drives the highest purchase rates.

Bundling & Recommendations: Test different bundle placements, product pairings, and offer logic to increase order value without manual setup or static rules.

High-growth brands use Nudge to test faster, learn faster, and scale what works without waiting on engineering. Every test runs automatically, and AI continuously optimizes experiences based on real shopper behavior.

Final Thoughts

A/B testing is how you turn traffic into revenue without increasing ad spend. It replaces assumptions with proof, reduces risk, and helps you build experiences shoppers actually want. The brands that test regularly see higher conversion rates, better user experiences, and stronger results across the entire funnel.

Traditional testing takes time. Waiting weeks for dev resources, running manual tests, and implementing changes one at a time makes it hard to keep up with fast-moving markets and shifting shopper behavior. Modern platforms make testing faster by letting marketers launch and iterate without code while AI continuously experiments to find what works best.

Ready to test faster and increase conversions? Nudge helps high-growth e-commerce brands personalize every shopper journey and continuously improve results without dev bottlenecks. Book a demo and see how you can grow your conversions and AOV with AI-powered testing.

FAQs

1. What is the main purpose of A/B testing?

The main purpose of A/B testing is to compare two versions of a page or element to see which one performs better. It helps you make data-driven decisions and optimize based on real shopper behavior instead of assumptions.

2. How long should you run an A/B test?

Run A/B tests for at least one full week to account for day-of-week variations and reach statistical significance. Tests with low traffic may need to run longer to gather enough data for reliable results.

3. What metrics should you track in A/B tests?

Track metrics that align with your business goals, like conversion rate, add-to-cart rate, AOV, revenue per visitor, and purchase rate. Avoid optimizing for vanity metrics like clicks if they don't drive revenue.

4. Can you A/B test multiple elements at once?

Yes, but multivariate testing requires more traffic to reach significance. If you want clear insights, test one variable at a time so you know exactly what drove the result.

5. What pages should you A/B test first?

Start with high-traffic, high-impact pages like landing pages, PDPs, and cart pages. Small improvements here create the biggest lift in revenue because they affect the most shoppers.