AI Search Visibility

AI Visibility Platform Checklist (U.S.): 12 Must-Have Features

The article explains how AI is reshaping product discovery, then outlines 12 must-have capabilities for U.S. AI visibility platforms, emphasizing multi-model prompt testing, citation-level attribution, SKU data readiness, governance, and shoppable funnels that connect AI answers to revenue.

Gaurav Rawat

Jan 23, 2026

AI search is now a product discovery channel. Consumers ask one question and expect a shortlist. That changes what “visibility” means for commerce operators.

In the U.S., the shift is measurable. Gartner predicts traditional search engine volume will drop 25% by 2026 due to AI chatbots and virtual agents Gartner search volume prediction. On the click side, Pew found that only 8% of visits with an AI summary produced a traditional search click, versus 15% without an AI summary Pew AI summaries click study. Pew also found 26% of searches with an AI summary ended with no clicks, versus 16% without Pew zero-click comparison. Ahrefs reported AI Overviews correlate with a 34.5% lower CTR for position #1 versus similar keywords without AI Overviews Ahrefs AI Overviews CTR analysis.

Commerce is already feeling the channel shift. Adobe reported that traffic from generative AI sources to U.S. retail sites rose about 1,300% year over year during Nov 1 to Dec 31, 2024 Adobe Analytics U.S. retail AI traffic growth, and about 1,950% year over year on Cyber Monday 2024 Adobe Cyber Monday AI traffic. In the same Adobe survey of 5,000 U.S. consumers, 39% said they had used generative AI for online shopping, and 53% planned to use it that year Adobe consumer AI shopping adoption.

Here’s the operational reality: AI-referred sessions can look great at the top of the funnel yet underperform at checkout. Adobe reported AI-referred visitors showed 8% higher engagement, viewed 12% more pages per visit, and had a 23% lower bounce rate than non-AI sources Adobe engagement, pages per visit, and bounce rate. The same Adobe reporting found those visitors were 9% less likely to convert than other channels at the time.

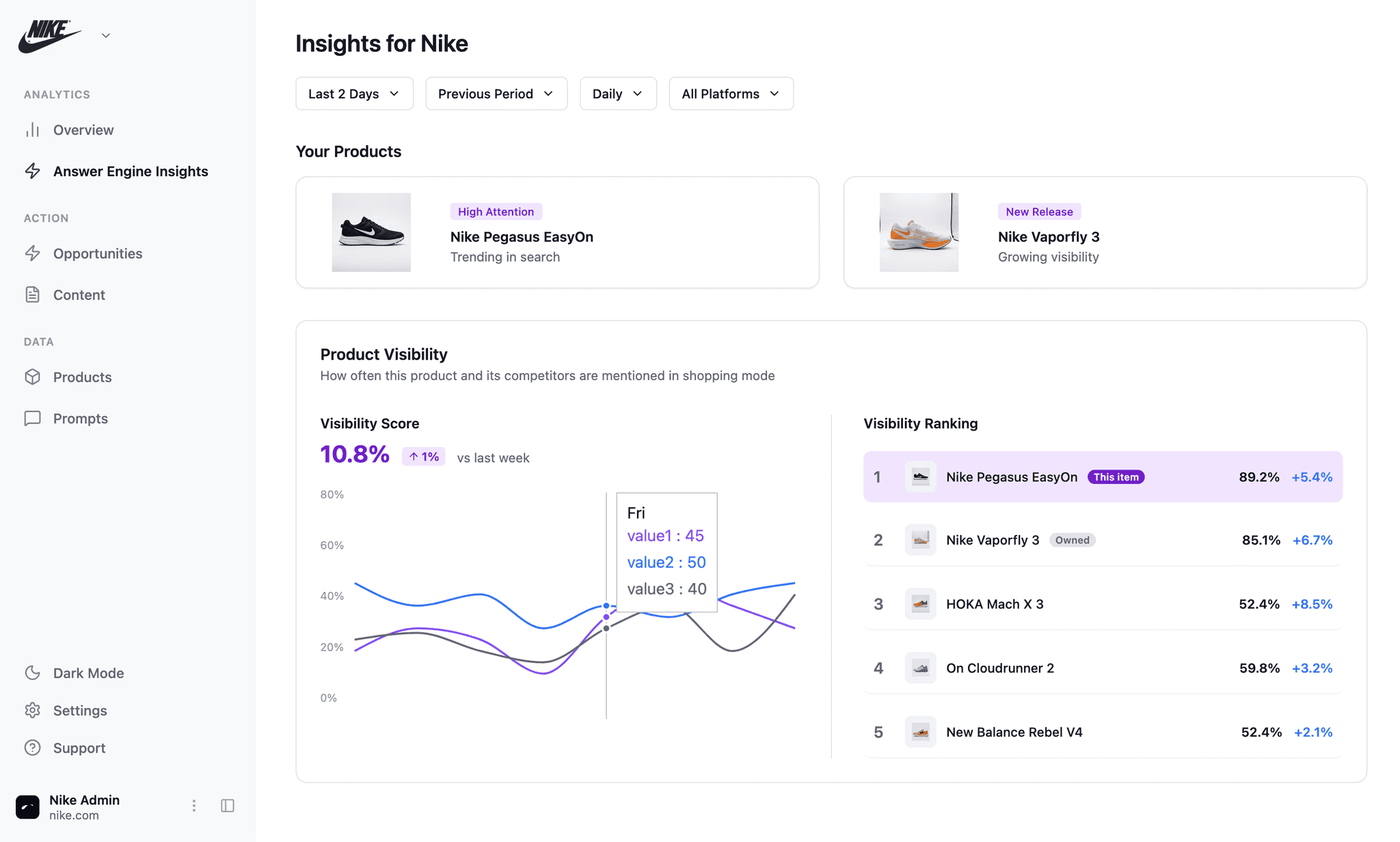

So, if you are evaluating an AI visibility platform in the United States, do not buy “monitoring.” Buy a system that measures discovery across models and turns that demand into shoppable conversion paths. That is the lens Nudge takes as an AI commerce visibility platform: Nudge AI Search Visibility.

AI Visibility Platforms: What They Are And What They Are Not

An AI visibility platform tracks how your brand and products appear in generative answers, across major AI assistants, and then helps you improve that presence through optimization workflows. It aligns with the concept of Generative Engine Optimization (GEO), which frames optimization as improving visibility in generated answers, not just ranking in blue links GEO definition in the research paper.

In practice, you should expect an AI visibility platform to help you:

Monitor mentions, citations, and how you are described across AI assistants GEO visibility concept.

Trace citations back to source URLs and content blocks GEO optimization and visibility framing.

Operationalize fixes across content and product data, including structured data and feeds Google product structured data guidance.

Connect AI-driven discovery to ecommerce conversion paths, since AI traffic can be more engaged but less likely to convert Adobe engagement and conversion nuance.

What it is not:

Not a classic SEO rank tracker. AI summaries reduce traditional clicks, so rank alone misses the new surface area Pew AI summary click impact.

Not social listening. Social tools track conversations, not generative answers and citations GEO focus on generative answers.

Not web analytics alone. Analytics can show AI referrals, but not why you were recommended, cited, or excluded Adobe AI referral reporting context.

Quick Glossary

AEO: Answer engine optimization, focused on being included in AI answers Optimization for generative answers context.

GEO: Generative engine optimization, methods to improve visibility in generative engines GEO definition.

AI Overviews / AI summaries: Search result experiences that can reduce clicks to traditional results Pew AI summaries study.

Mentions vs. citations: Mentions are brand references. Citations include linked sources. Citations matter because being cited correlates with more clicks Seer on citation correlation with clicks.

Prompt sets: A standardized library of prompts you run repeatedly across models for measurement stability GEO testing and optimization framing.

The U.S. AI Visibility Platform Checklist: 12 Must-Have Features

Use this as your AI visibility platform checklist for U.S. brands. Score each feature from 0 to 2.

0: Missing or manual.

1: Partial support, limited scale, or weak auditability.

2: Strong coverage, repeatable measurement, and workflow-ready outputs.

As you evaluate, keep the Nudge framing in mind: AI visibility is a commerce discovery and conversion problem. You need multi-model monitoring and shoppable, prompt-aligned funnels that turn AI-driven intent into revenue Nudge Shoppable Funnels.

1) Multi-Model Coverage Across ChatGPT, Perplexity, Claude, And Gemini

If discovery happens across multiple assistants, your measurement must match. You need model-by-model reporting and side-by-side comparisons, since outputs vary by model behavior and sourcing patterns GEO focus on visibility in generated answers.

How to verify: Ask for a sample report that runs the same prompt set across ChatGPT, Perplexity, Claude, and Gemini, with timestamps and stored outputs Need for systematic optimization and measurement.

Nudge angle: Prioritize coverage where shopping is emerging inside assistants Nudge on AI shopping assistants.

2) Prompt-Level Testing At Scale (Branded And Product)

Prompts are the new keywords. Your platform should support large prompt libraries, repeated runs, and controls that reduce noise. Treat prompt testing like experimentation, not a one-off screenshot GEO methods and optimization framing.

Prompt library requirements: category prompts, “best for” prompts, comparisons, problem-solution prompts, and retailer prompts Optimization for generative answers context.

How to verify: Confirm you can rerun prompts, segment by U.S. geography, store prompt versions, and export the library for governance U.S. governance context for operational rigor.

Nudge angle: Build prompt sets that map to shoppable destinations, not blog posts Nudge shoppable funnels approach.

3) Citation And Source Attribution Down To URL And Content Block

Attribution is how you turn AI visibility into action. You need traceability from an AI answer to the cited domain and page. Then you need to see what is being rewarded, so you can close gaps Why citations matter for clicks.

How to verify: Ask vendors to show which URLs drive your visibility and which competitor URLs are cited for the same prompts Citation importance context.
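To make that check concrete, here is a minimal sketch of URL-level attribution for a single prompt. It assumes you already store the cited URLs from each model's answer. The domains (`yourbrand.example`, `rival.example`, `reviews.example.org`) and the `CITATIONS` structure are hypothetical. The host normalization only strips a leading `www.` and is not a full public-suffix parse.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical stored citations for one prompt, keyed by model.
CITATIONS = {
    "chatgpt": [
        "https://www.yourbrand.example/p/trail-runner",
        "https://reviews.example.org/best-trail-shoes",
    ],
    "perplexity": [
        "https://www.rival.example/shoes/x1",
        "https://reviews.example.org/best-trail-shoes",
    ],
}

# Assumption: the domains you own, already normalized.
OWN_DOMAINS = {"yourbrand.example"}


def normalize_host(url):
    """Lowercased host without a leading 'www.' (a simplification, not a public-suffix parse)."""
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def citation_report(citations, own_domains):
    """Split cited URLs into yours vs. everyone else's, counting how many models cited each."""
    counts = Counter(url for urls in citations.values() for url in set(urls))
    own = {u: c for u, c in counts.items() if normalize_host(u) in own_domains}
    others = {u: c for u, c in counts.items() if normalize_host(u) not in own_domains}
    return own, others


own, others = citation_report(CITATIONS, OWN_DOMAINS)
print("Your cited URLs:", own)
print("Other cited URLs:", others)
```

The "others" bucket is where citation gaps show up. In this example, the review page cited by both models is a candidate target for content or outreach work.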

Nudge angle: Use attribution to route users to prompt-aligned product pages and funnels Nudge AI Search Visibility.

4) Share Of Voice And Competitive Benchmarking By Category

You need an AI share of voice baseline. Define it as the percent of answers where your brand appears versus competitors, across a defined prompt set. Segment by category, intent stage, and retailer versus brand site Need for systematic visibility measurement.

How to verify: Request a benchmark view that groups prompts by category and intent, then shows your presence versus named competitors Visibility measurement framing.
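The share of voice definition above reduces to simple counting. This sketch assumes each stored answer has already been tagged with a category, an intent stage, and the set of brands it mentions. The record shape and brand names (`Acme`, `Rival`) are hypothetical.

```python
from collections import defaultdict

# Hypothetical record shape: one stored answer per (prompt, model, run),
# with the brands detected in that answer.
ANSWERS = [
    {"category": "running shoes", "intent": "best for", "brands": {"Acme", "Rival"}},
    {"category": "running shoes", "intent": "comparison", "brands": {"Rival"}},
    {"category": "running shoes", "intent": "best for", "brands": {"Acme"}},
    {"category": "trail packs", "intent": "best for", "brands": set()},
]


def share_of_voice(answers, brand, key="category"):
    """Percent of answers in each segment (category, intent, ...) that mention `brand`."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for answer in answers:
        segment = answer[key]
        totals[segment] += 1
        if brand in answer["brands"]:
            hits[segment] += 1
    return {seg: 100.0 * hits[seg] / totals[seg] for seg in totals}


for brand in ("Acme", "Rival"):
    print(brand, share_of_voice(ANSWERS, brand))
    print(brand, share_of_voice(ANSWERS, brand, key="intent"))
```

Passing `key="intent"` gives the intent-stage cut from the same records. This keeps the category and intent views consistent with each other.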

Nudge angle: Pair share of voice with funnel coverage, so visibility becomes revenue coverage Nudge shoppable conversion paths.

5) Sentiment, Attributes, And “How You’re Described” Tracking

Mentions alone are not enough. You need to track descriptors and attributes that show up in answers. For commerce, attribute accuracy matters. Wrong specs can kill conversion, even if you “win” the mention AI traffic conversion gap context.

How to verify: Ask for a report that extracts common descriptors and flags inconsistencies across runs Optimization and testing context.
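As a rough illustration of descriptor tracking, the sketch below counts how many reruns use each term from a fixed vocabulary. It flags terms that appear in only a minority of runs. Several parts are hypothetical and would need tuning in practice: the vocabulary, the `min_share` threshold of 0.5, and the idea of treating "appears in fewer than half of runs" as an inconsistency.

```python
import re
from collections import Counter

# Hypothetical descriptor vocabulary; in practice it could come from your catalog attributes.
DESCRIPTORS = ["waterproof", "lightweight", "budget", "premium", "wide fit"]


def descriptor_counts(outputs):
    """Count how many runs mention each descriptor (case-insensitive, whole words)."""
    counts = Counter()
    for text in outputs:
        lowered = text.lower()
        for term in DESCRIPTORS:
            if re.search(r"\b" + re.escape(term) + r"\b", lowered):
                counts[term] += 1
    return counts


def inconsistent(outputs, min_share=0.5):
    """Descriptors that appear in some runs but in fewer than `min_share` of them."""
    counts = descriptor_counts(outputs)
    n = len(outputs)
    return sorted(term for term, c in counts.items() if c / n < min_share)


runs = [
    "The Acme X1 is a lightweight, waterproof trail shoe.",
    "Acme X1: lightweight and premium.",
    "A lightweight option from Acme.",
]
print(descriptor_counts(runs))
print(inconsistent(runs))
```

A flagged term like "waterproof" is worth checking against the catalog. If the product is not actually waterproof, it is the kind of wrong spec that can hurt conversion.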

Nudge angle: Treat attribute truth as a merchandising problem, not a PR problem Nudge AI search optimization guide.

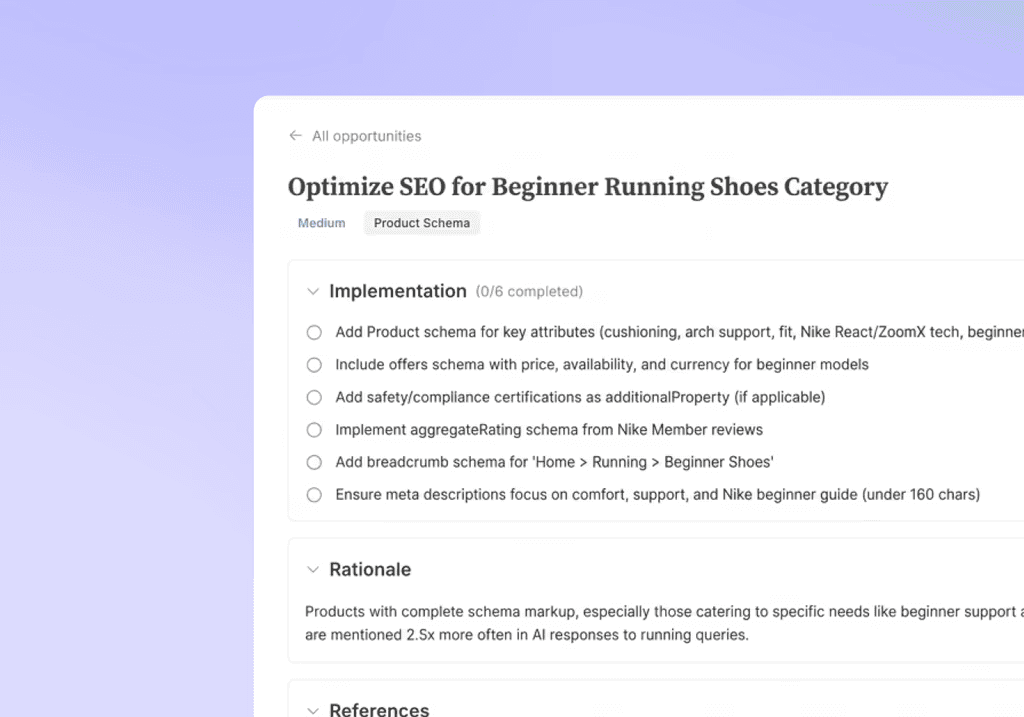

6) Recommendations That Map To Actions (Content And Product Data)

Insights must turn into prioritized work. Your platform should produce recommendations you can implement across content and product data. GEO research reports visibility improvements “up to 40%” from optimization strategies, but results vary by site, category, and competition GEO paper visibility improvement claim.

Action types to require: editorial fixes and structured data or feed fixes Structured data requirements and recommendations.

How to verify: Ask for a prioritized backlog with clear owners, expected impact rationale, and before-after measurement support Optimization measurement framing.

Nudge angle: Route recommendations into shoppable funnel builds, not just content edits Nudge funnel execution.

7) Product Data Readiness (Schema And Feeds) For AI Shopping

In U.S. commerce, product data is your eligibility layer. Require support for Product structured data and Offer details as a machine-readable baseline Google product structured data documentation. Also require Merchant Center identifier coverage. Google warns that missing or incorrect identifiers like brand and GTIN/MPN can limit visibility Google Merchant Center identifier guidance.
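For reference, here is a minimal sketch that renders a schema.org `Product` with a nested `Offer` as JSON-LD from a catalog record. All of the following are placeholders, not values from any real catalog: the record fields, the SKU, the URL, and the 13-digit GTIN. The code also assumes a 13-digit GTIN, which is why it uses the `gtin13` property. Validate real markup with Google's tools before shipping.

```python
import json

# Hypothetical catalog record; field names and values are placeholders.
RECORD = {
    "sku": "X1-BLK-10",
    "title": "Acme X1 Trail Runner",
    "brand": "Acme",
    "gtin": "0012345678905",
    "mpn": "X1-10",
    "url": "https://shop.example/p/x1",
    "price": 129.0,
    "in_stock": True,
}


def product_jsonld(record, currency="USD"):
    """Build a schema.org Product with a nested Offer from one catalog record."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": record["title"],
        "sku": record["sku"],
        "gtin13": record["gtin"],
        "mpn": record["mpn"],
        "brand": {"@type": "Brand", "name": record["brand"]},
        "offers": {
            "@type": "Offer",
            "url": record["url"],
            "price": f"{record['price']:.2f}",
            "priceCurrency": currency,
            "availability": (
                "https://schema.org/InStock"
                if record["in_stock"]
                else "https://schema.org/OutOfStock"
            ),
        },
    }


# Emit the tag you would place in the product page's HTML.
print('<script type="application/ld+json">')
print(json.dumps(product_jsonld(RECORD), indent=2))
print("</script>")
```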

How to verify: Run a sample SKU audit. Ask for missing identifiers, price and availability mismatches, and Offer markup gaps Merchant Center visibility limits from identifiers.
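A sample SKU audit can start small. The sketch below checks three things for each SKU:

- whether the identifiers are present,
- whether the GTIN passes the standard GS1 check-digit test,
- whether price and availability match between the feed row and values taken from the product page.

The feed and page dictionaries, and how you populate them, are assumptions.

```python
REQUIRED_IDENTIFIERS = ("brand", "gtin", "mpn")


def valid_gtin(code):
    """GS1 check-digit test for GTIN-8/12/13/14."""
    if not code.isdigit() or len(code) not in (8, 12, 13, 14):
        return False
    body, check = code[:-1], int(code[-1])
    # Weights alternate 3, 1, 3, ... starting from the digit next to the check digit.
    total = sum(int(d) * (3 if i % 2 == 0 else 1) for i, d in enumerate(reversed(body)))
    return (10 - total % 10) % 10 == check


def audit_sku(feed_row, page):
    """List identifier gaps and feed-vs-page mismatches for one SKU."""
    issues = [f"missing {f}" for f in REQUIRED_IDENTIFIERS if not feed_row.get(f)]
    gtin = feed_row.get("gtin")
    if gtin and not valid_gtin(gtin):
        issues.append("invalid gtin check digit")
    if round(feed_row["price"], 2) != round(page["price"], 2):
        issues.append(
            f"price mismatch: feed {feed_row['price']:.2f} vs page {page['price']:.2f}"
        )
    if feed_row["availability"] != page["availability"]:
        issues.append(
            f"availability mismatch: feed {feed_row['availability']} vs page {page['availability']}"
        )
    return issues


# Hypothetical SKU with an empty MPN and a stale feed price.
feed_row = {"brand": "Acme", "gtin": "036000291452", "mpn": "", "price": 129.0,
            "availability": "in_stock"}
page = {"price": 119.0, "availability": "in_stock"}
print(audit_sku(feed_row, page))
```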

Nudge angle: Make SKU truth measurable, then connect it to prompt-level visibility outcomes Nudge measurement approach.

8) AI Shopping Surface Awareness (Native Commerce In Assistants)

Assistants are adding shopping-native experiences. Perplexity describes “Buy with Pro” as a native checkout experience for Pro users in the U.S. Perplexity also states merchants with richer details like availability, reviews, and specs are more likely to be recommended. AP News reported partnerships enabling shopping experiences directly in Google Gemini, initially in the U.S. Wired reported OpenAI added shopping features to ChatGPT.

How to verify: Require reporting that tags answers as informational versus shopping or recommendation oriented, then tracks your inclusion by type Perplexity shopping experience context.
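To show what "inclusion by answer type" could look like, here is a deliberately crude first-pass tagger. It uses substring cues and then computes how often a brand appears within each type. The cue lists are hypothetical and will misfire on some text; for example, "buy" also matches "buyer". Treat this as a starting point to spot-check against hand-labeled answers, not a validated classifier.

```python
from collections import Counter

# Hypothetical cue lists for a first-pass tagger; tune and spot-check against labeled answers.
SHOPPING_CUES = ("buy", "price", "in stock", "add to cart", "checkout", "$")
RECOMMENDATION_CUES = ("best", "top pick", "recommend", "alternatives", " vs ")


def answer_type(prompt, answer):
    """Tag an answer as shopping, recommendation, or informational by simple substring cues."""
    text = f"{prompt} {answer}".lower()
    if any(cue in text for cue in SHOPPING_CUES):
        return "shopping"
    if any(cue in text for cue in RECOMMENDATION_CUES):
        return "recommendation"
    return "informational"


def inclusion_by_type(rows, brand):
    """Share of answers of each type that mention `brand` (case-insensitive substring)."""
    totals, hits = Counter(), Counter()
    for prompt, answer in rows:
        kind = answer_type(prompt, answer)
        totals[kind] += 1
        if brand.lower() in answer.lower():
            hits[kind] += 1
    return {kind: hits[kind] / totals[kind] for kind in totals}


rows = [
    ("best trail shoes for beginners", "Acme X1 is a solid choice."),
    ("how do trail shoes differ from road shoes", "Trail shoes have deeper lugs."),
    ("trail shoes under $150", "Rival R2 is $140 and in stock."),
]
print(inclusion_by_type(rows, "Acme"))
```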

Nudge angle: Treat assistants like new retailers. Merchandising rules apply Nudge on AI shopping surfaces.

9) Conversion Connection: From AI Visibility To Shoppable Funnels

Most tools stop at “you were mentioned.” That is not enough. You need to connect AI visibility to post-click performance, because AI traffic can be more engaged but less likely to convert.

What to require: UTM support, landing experience testing, and the ability to build prompt-aligned, shoppable destinations Nudge shoppable funnels.

How to verify: Ask for a workflow that takes a high-volume prompt cluster and produces a dedicated landing path with measurement Nudge AI visibility workflow.
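UTM support mostly comes down to tagging each prompt-aligned landing path consistently. That way, analytics can attribute sessions to a prompt cluster. Here is a minimal sketch using only the standard library. The `ai_assistant` medium, the campaign naming, and the URLs are hypothetical conventions; pick one scheme and keep it stable.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def with_utm(url, source, campaign, content=None, medium="ai_assistant"):
    """Return `url` with UTM parameters added, keeping any existing query parameters."""
    parts = urlparse(url)
    query = dict(parse_qsl(parts.query))
    query.update({"utm_source": source, "utm_medium": medium, "utm_campaign": campaign})
    if content:
        query["utm_content"] = content
    return urlunparse(parts._replace(query=urlencode(query)))


# One landing path per prompt cluster; cluster and URL names here are placeholders.
print(with_utm("https://shop.example/lp/trail-shoes?ref=x", "perplexity", "trail-shoes-best-for"))
```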

Nudge angle: Close the AI conversion gap by matching intent to the first click Nudge conversion paths.

10) Workflow Integrations (SEO, Analytics, Experimentation)

An AI visibility platform must fit your stack. Require integrations and exports that support operational loops. Also require governance features, because U.S. teams need auditability across cross-functional workflows.

Integrations to require: analytics, experimentation, CMS, product feed systems, and ticketing tools Structured data operational dependencies.

Governance to require: role-based access, audit logs, and export APIs for agencies and enterprise teams Governance drivers from U.S. privacy landscape.

Nudge angle: Push insights into build systems, so you ship fixes weekly Nudge on checking AI brand visibility.

11) Governance, Privacy, And U.S. Compliance Readiness

U.S. compliance is a patchwork. Perkins Coie describes a growing set of state consumer privacy laws, with additional states, including Indiana, Kentucky, and Rhode Island, beginning enforcement in 2026. Your platform should support data minimization, retention controls, and PII handling aligned with your policies.

Also treat reviews and testimonials as a compliance surface. The FTC announced a final rule banning fake reviews and testimonials in August 2024, effective October 21, 2024.

How to verify: Ask for data retention settings, PII controls, audit logs, and documentation on review content handling FTC rule context.

Nudge angle: Treat governance as a prerequisite for scaling prompt libraries and SKU audits across teams Nudge platform context.

12) Measurement Quality: Freshness, Reproducibility, And Auditability

AI outputs change. Models update. Citations shift. If you cannot reproduce results, you cannot manage performance. Require run logs, prompt versioning, timestamps, and stored outputs so you can audit changes over time.
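Here is one way to hold the run logs, prompt versions, timestamps, and stored outputs described above. It is a minimal sketch that appends one JSON line per run and derives the prompt version from a hash of the exact prompt text. The record fields, the 12-character SHA-256 prefix, and the `runs.jsonl` path are assumptions, not a documented format of any platform.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class RunRecord:
    prompt_id: str
    prompt_version: str  # short content hash of the exact prompt text
    model: str
    run_at: str  # ISO 8601 timestamp in UTC
    output: str
    cited_urls: tuple


def prompt_version(text):
    """Version a prompt by hashing its text, so any wording change yields a new version."""
    return hashlib.sha256(text.strip().encode("utf-8")).hexdigest()[:12]


def log_run(prompt_id, prompt_text, model, output, cited_urls, path="runs.jsonl"):
    """Append one run to a JSON Lines log and return the record."""
    record = RunRecord(
        prompt_id=prompt_id,
        prompt_version=prompt_version(prompt_text),
        model=model,
        run_at=datetime.now(timezone.utc).isoformat(),
        output=output,
        cited_urls=tuple(cited_urls),
    )
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(record)) + "\n")
    return record


if __name__ == "__main__":
    rec = log_run("trail-best-01", "best trail shoes for beginners", "chatgpt",
                  "Acme X1 is a solid choice.", ["https://www.yourbrand.example/p/x1"])
    print(rec.prompt_version, rec.run_at)
```

An append-only file is the simplest auditable store. Any export a vendor offers should give you at least these fields per run.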

How to verify: Ask to export run logs, prompts, and outputs. Ask how the platform handles variance across reruns Need for repeatable methods.
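One way to quantify variance across reruns is a binomial confidence interval on the mention rate for a prompt and model. This sketch uses the Wilson score interval, which is our choice of method, not something the source specifies. The `max_width` threshold of 0.3 for calling a result "stable" is a hypothetical cutoff.

```python
import math


def wilson_interval(hits, n, z=1.96):
    """Wilson score interval for a mention rate of hits/n (z=1.96 is roughly 95%)."""
    if n == 0:
        return (0.0, 1.0)
    p = hits / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return (max(0.0, center - half), min(1.0, center + half))


def stability(hits, n, max_width=0.3):
    """Flag a prompt as unstable when its interval is too wide to act on."""
    low, high = wilson_interval(hits, n)
    return {"rate": hits / n if n else 0.0, "low": low, "high": high,
            "stable": (high - low) <= max_width}


# Brand appeared in 6 of 10 reruns of the same prompt on the same model.
print(stability(6, 10))
```

With only 10 reruns, a 60% mention rate is compatible with a wide range of true rates. That is why a single screenshot, or a handful of runs, is weak evidence.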

Red flags: opaque scores, no reruns, no prompt library export, and no way to validate citations Why validating citations matters.

Nudge angle: Use auditability to connect visibility changes to shipped fixes and funnel outcomes Nudge funnel measurement.

Quick “Best For / Watch-Outs” Matrix: U.S. Commerce Teams

| Team Type | Needed Features | Common Pitfalls | Buying Motion |
|---|---|---|---|
| Mid-Market DTC | Multi-model coverage, prompt testing, SKU audits, and shoppable funnels | Buying monitoring without attribution or conversion tooling | Start with a category prompt set and 25-50 SKUs, then expand |
| Enterprise DTC | Governance, auditability, integrations, and repeatable measurement | Inconsistent measurement and no run logs | RFP-led evaluation with security and compliance review |
| Multi-Brand Retailers | Offer accuracy, identifiers, and feed quality at scale | Price and availability mismatches that break trust | Pilot by department, then roll out by taxonomy |
| Agencies | Export APIs, prompt library portability, and client-ready reporting | Reporting without clear actions and owners | Standardize prompt sets and reporting templates across clients: Nudge GEO guide |

30-Day Evaluation Plan: RFP-Ready

Run a fast, controlled evaluation. Treat it like a growth experiment.

Week 1: Define Prompt Sets, Categories, And Competitors

Pick 2 to 3 categories. Map them to your revenue priorities.

Build a prompt library. Include “best for,” comparison, and retailer prompts Prompt-driven optimization framing.

Select 5 competitors per category. Keep it stable for the pilot.

Week 2: Run Baselines Across ChatGPT, Perplexity, Claude, And Gemini

Run the same prompt set across the four models. Store outputs and timestamps. Nudge runs this automatically every day.

Capture mentions, citations, descriptors, and source URLs Citations and click correlation.

Segment informational versus shopping-style answers.

Week 3: Implement 3 To 5 Fixes (Content And Data)

Ship 1 to 2 content changes aligned to citation gaps Optimization strategies context.

Ship 1 to 2 product data changes. Fix identifiers and Offer details Identifier correctness and visibility.

Build 1 prompt-aligned shoppable landing path to reduce the conversion gap.

Week 4: Measure Lift, Then Decide

Rerun the same prompt set. Compare share of voice and citations.

Track AI-referred engagement and conversion changes.

Decide based on auditability and action throughput, not dashboards.
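When you compare Week 2 and Week 4, it helps to check whether a share of voice change is larger than rerun noise. Here is a minimal sketch using a pooled two-proportion z-test, which relies on a normal approximation. The counts are illustrative, not real results. The 1.96 cutoff corresponds to roughly 95% confidence.

```python
import math


def two_proportion_z(hits_before, n_before, hits_after, n_after):
    """Pooled two-proportion z statistic for a change in mention rate (normal approximation)."""
    p_before = hits_before / n_before
    p_after = hits_after / n_after
    pooled = (hits_before + hits_after) / (n_before + n_after)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_before + 1 / n_after))
    return 0.0 if se == 0 else (p_after - p_before) / se


def lift_summary(hits_before, n_before, hits_after, n_after):
    """Share of voice before/after in percent, the change in points, and a rough significance flag."""
    z = two_proportion_z(hits_before, n_before, hits_after, n_after)
    return {
        "sov_before_pct": 100 * hits_before / n_before,
        "sov_after_pct": 100 * hits_after / n_after,
        "change_pp": 100 * (hits_after / n_after - hits_before / n_before),
        "z": z,
        "likely_real": abs(z) >= 1.96,
    }


# Illustrative: mentioned in 20 of 100 baseline answers, 35 of 100 after fixes.
print(lift_summary(20, 100, 35, 100))
```

A significant z-score shows the change is unlikely to be rerun noise. It does not show that your fixes caused it, since model updates happen during the pilot too.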

RFP Question Bank Mapped To The 12 Features

Multi-model coverage: Show the same prompt set across ChatGPT, Perplexity, Claude, and Gemini with timestamps Systematic measurement requirement.

Prompt testing: Can we export prompt libraries and rerun with versioning Prompt-driven optimization context?

Attribution: Provide URL-level citation reports and competitor citation gaps Why citations matter.

Product data: Do you audit GTIN/MPN/brand and Offer fields Merchant Center identifiers?

Governance: Provide retention, PII controls, and audit logs aligned to U.S. privacy complexity U.S. privacy patchwork.

Reviews compliance: How do you support compliant review and testimonial practices given the FTC rule effective Oct 21, 2024 FTC fake reviews rule?

How Nudge Approaches AI Visibility Differently: Visibility Plus Conversion

Most platforms stop at measurement. Nudge treats AI visibility as a commerce system. You measure discovery, fix representation, and convert intent through shoppable funnels.

Multi-model discovery measurement: Track how you show up across assistants, then prioritize what to fix Nudge visibility checking workflow.

Prompt-aligned shoppable funnels: Match the prompt’s intent to a landing path built to convert Nudge Shoppable Funnels.

SKU-level readiness: Treat identifiers and Offer accuracy as visibility constraints, not back-office details Google on identifiers limiting visibility.

Operator-first playbooks: Use practical workflows for how to check visibility and how to optimize for AI answers Nudge AI search optimization guide.

If you want a deeper primer on GEO, use Nudge’s guide to generative engine optimization Nudge GEO guide.

Get A U.S. AI Visibility Audit: Prompt Set Plus SKU Sample

If you are evaluating platforms, we're ready to give you a free audit to get things going!

You will receive:

A sample prompt library tailored to your categories

A competitor benchmark snapshot using share of voice logic

A SKU data readiness check focused on identifiers

Funnel recommendations to reduce the AI conversion gap

You provide:

Your top categories and priority products.

10 SKUs to audit for product data readiness.

5 competitors per category.

Ready to improve AI Search visibility? Get a free audit with us now!

FAQs: AI Visibility Platforms For U.S. Brands

What Metrics Should An AI Visibility Platform Track?

Track mentions and citations, share of voice by prompt set, sentiment and descriptors, source URLs, and change over time. Citations matter because being cited in an AI Overview correlates with more clicks. Seer reported 35% more organic clicks and 91% more paid clicks when a brand is cited versus when it is not, with correlation noted. Also account for click suppression in AI summary contexts, since Pew found fewer traditional clicks when AI summaries appear.

How Do I Test AI Visibility Across ChatGPT, Perplexity, Claude, And Gemini Fairly?

Standardize your prompt sets, rerun them repeatedly, store timestamps, and version prompts so you can reproduce results. Require audit logs and stored outputs so you can validate citations and compare variance across runs.

How Does AI Visibility Relate To Ecommerce Revenue?

The chain is simple: AI answer to click to session to conversion. The problem is that AI-driven sessions can behave differently. Adobe reported AI-referred visitors showed higher engagement, more pages per visit, and lower bounce rate, yet were 9% less likely to convert than other channels at the time of reporting. Close the gap by building prompt-aligned, shoppable landing experiences that match the intent behind the prompt.

Do Structured Data And Product Feeds Matter For AI Assistants?

Yes. Use Product structured data and Offer markup as your machine-readable baseline. Also ensure correct identifiers like brand and GTIN/MPN, because Google warns missing or incorrect identifiers can limit visibility. For assistant-native shopping, Perplexity says richer product details like availability, reviews, and specs increase recommendation likelihood.