
How to Measure AI Search Visibility and Boost Rankings in 2026

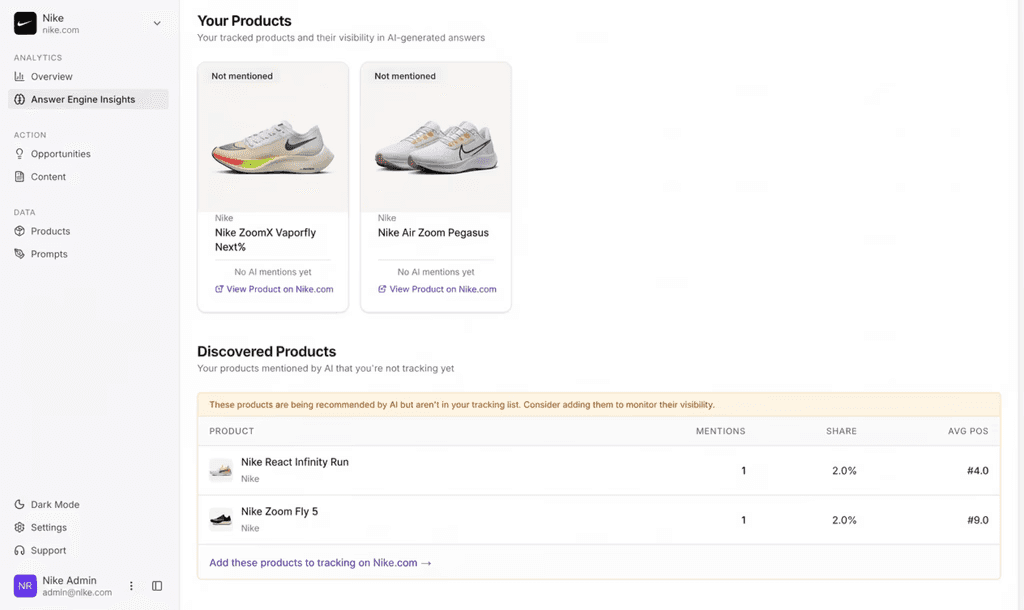

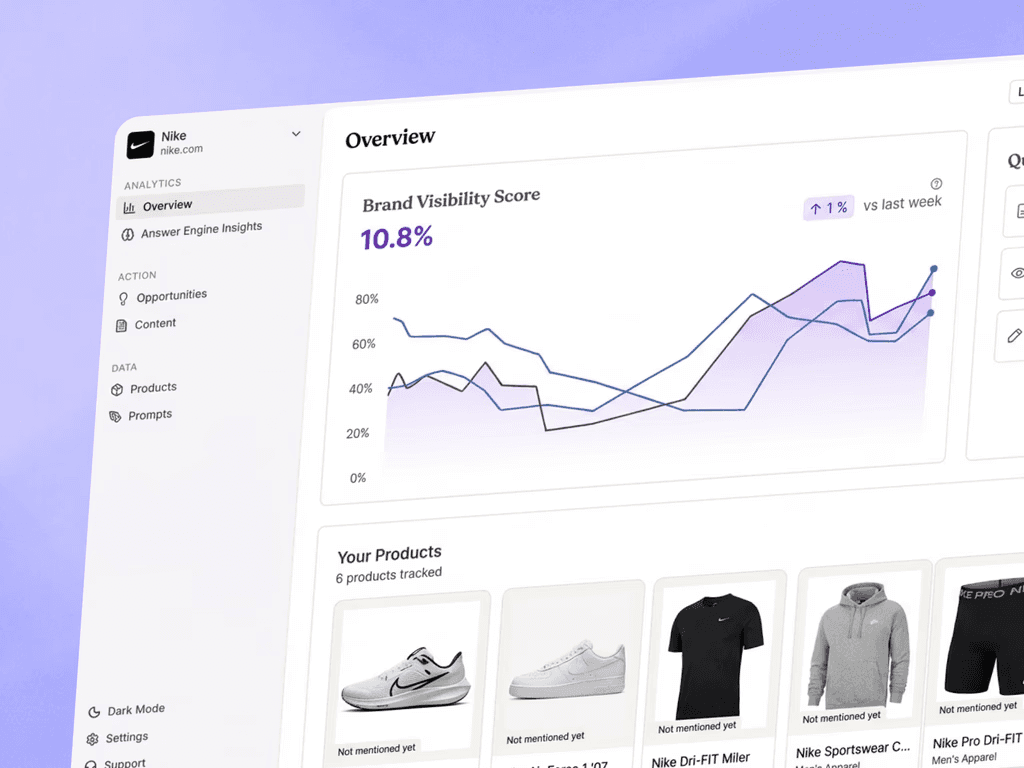

Analyze AI-driven brand visibility across prompts, categories, and competitors to identify clear opportunities. Surface prioritized actions to improve accuracy, visibility, and recommendation likelihood across AI answers.

Kanishka Thakur

Jan 8, 2026

AI search engine optimization is the practice of earning brand mentions, citations, and clicks from AI-generated answers across platforms like Google AI Overviews, ChatGPT, Gemini, Perplexity, Copilot, and Claude. In 2026, measuring visibility means tracking when, where, and how your brand appears inside these answers, not just where it ranks on a classic SERP. Ecommerce brands feel the urgency: AI-generated suggestions are replacing clicks, contributing to a 22% drop in search traffic for many retailers, according to a recent PRNEWS analysis. To protect and grow revenue, you need a measurement-first approach: establish a baseline, implement tracking for AI referrals, analyze citation quality and sentiment, then iterate on content and technical tactics. Nudge ties this together by integrating AI discovery, evaluation, and conversion data across commerce funnels, giving DTC and multi-brand retailers a single view of which AI answers drive visits and sales.

Understanding AI Search Engine Optimization

AI search engine optimization focuses on maximizing a brand’s presence inside AI-generated answers. Instead of competing for “10 blue links,” you compete for inclusion in conversational summaries, curated product lists, and cited sources within AI-powered search. Traditional keyword rankings still matter, but they no longer reflect full reach or influence along the buyer journey.

For commerce teams, the shift is practical: an answer that names your brand and links to a current, trusted page can replace a series of clicks. With AI suggestions displacing organic traffic for many retailers, prioritizing AI visibility is now a direct lever for demand capture. Success blends content clarity, technical structure, authority signals, and analytics that attribute sessions and conversions originating from AI answers.

Defining Generative Search Engine Optimization

Generative search engine optimization (GEO) is the discipline of structuring content and entities so AI systems can retrieve, reuse, and summarize them correctly in real time. It extends answer engine optimization by tuning content for large language models, conversational queries, and dynamic prompt responses that produce brand citations and favorable summaries.

Common GEO tactics include:

Structuring answers for machine readability with clear schemas and concise, verifiable statements.

Using multimodal assets (short videos, charts, and images) that AI systems can reference in rich responses.

Building authority signals through reviews, PR mentions, and quality backlinks that reinforce brand trust.

Core Metrics for Measuring AI Search Visibility

Use a tight set of KPIs to quantify progress and tie visibility to revenue impact:

Metric | Description |

|---|---|

Mention Rate | Percentage of prompts where your brand or site is named in an AI answer, a core benchmark highlighted in Graph Digital’s guide to AI visibility metrics. |

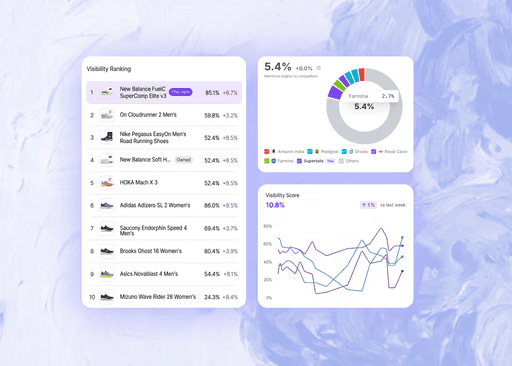

Positioning | Your placement within a multi-source generative answer or curated product shortlist. |

Citation Quality | Whether the answer links to accurate, current pages and preferred destinations. |

Sentiment/Tone | Positive, neutral, or negative framing of your brand and products. |

AI Referral Traffic | Sessions from LLMs as captured via analytics (e.g., chat.openai.com, perplexity.ai). |

Prioritize citation opportunities over raw visibility: brand mentions with high-quality links and favorable phrasing tend to drive the strongest downstream sales as AI answers replace traditional clicks. Track competitive share of voice, shifts in answer position, and sentiment trends over time to spot gains and risks early.
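As a minimal sketch of how these KPIs can be computed from a manual or tool-exported log, the Python below assumes each logged answer records the prompt, the engine, and the brands named in order of appearance. The `AnswerLog` schema, the brand names, and the sample rows are hypothetical placeholders, not a standard format.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AnswerLog:
    """One AI answer for one prompt on one engine (hypothetical log schema)."""
    prompt: str
    engine: str
    brands: list = field(default_factory=list)  # brands in order of appearance
    sentiment: Optional[str] = None  # framing of *our* brand, if it is mentioned


def mention_rate(logs, brand):
    """Share of answers that name the brand at all."""
    if not logs:
        return 0.0
    return sum(brand in log.brands for log in logs) / len(logs)


def average_position(logs, brand):
    """Mean 1-based position of the brand in answers that mention it, else None."""
    positions = [log.brands.index(brand) + 1 for log in logs if brand in log.brands]
    return sum(positions) / len(positions) if positions else None


def share_of_voice(logs):
    """Each brand's share of all mentions, counting a brand once per answer."""
    counts = Counter(b for log in logs for b in set(log.brands))
    total = sum(counts.values())
    return {b: c / total for b, c in counts.items()} if total else {}


def sentiment_mix(logs, brand):
    """Count sentiment labels across answers that mention the brand."""
    return dict(Counter(log.sentiment for log in logs
                        if brand in log.brands and log.sentiment))


# Hypothetical sample log; "OurBrand", "BrandB", and "BrandC" are placeholders.
logs = [
    AnswerLog("best trail running shoes", "ChatGPT", ["BrandB", "OurBrand"], "positive"),
    AnswerLog("best trail running shoes", "Perplexity", ["BrandB"]),
    AnswerLog("waterproof trail shoes under $150", "Gemini", ["OurBrand", "BrandC"], "neutral"),
    AnswerLog("OurBrand vs BrandB", "Copilot", ["OurBrand", "BrandB"], "positive"),
]

print(mention_rate(logs, "OurBrand"))      # 0.75
print(average_position(logs, "OurBrand"))  # (2 + 1 + 1) / 3
print(share_of_voice(logs)["OurBrand"])    # 3 / 7
print(sentiment_mix(logs, "OurBrand"))
```

Counting each brand once per answer keeps share of voice from being inflated by answers that repeat a name several times.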

Establishing a Baseline with Manual Testing

Before you invest in tooling, run a two-week manual audit. Build a 50-prompt pack that covers your priority categories, value props, and product comparisons across ChatGPT, Gemini, Perplexity, Claude, and Copilot. Vary intent: informational, evaluative, and transactional.

Log each response in a simple sheet:

Was your brand mentioned? Where was it positioned?

What was the tone and factual accuracy?

Which URLs were cited? Were competitors included—and how were they framed?

This approach creates a reliable baseline, reveals content inconsistencies, and surfaces competitor benchmarks without upfront spend.
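A spreadsheet works fine for this audit. If you prefer to generate the sheet, here is a minimal Python sketch, using only the standard library, that expands a prompt pack across engines and intents into one blank row per test. The columns mirror the questions above; the sample prompts and the output filename are hypothetical, and a real pack would hold around 50 prompts rather than three.

```python
import csv
import itertools
import tempfile
from pathlib import Path

ENGINES = ["ChatGPT", "Gemini", "Perplexity", "Claude", "Copilot"]

# Hypothetical prompts grouped by intent; extend to your ~50-prompt pack.
PROMPT_PACK = {
    "informational": ["what should I look for in trail running shoes"],
    "evaluative": ["best trail running shoes for wide feet"],
    "transactional": ["buy waterproof trail running shoes under $150"],
}

# One column per question in the audit checklist.
FIELDS = [
    "engine", "intent", "prompt",
    "brand_mentioned", "position", "tone", "factually_accurate",
    "cited_urls", "competitors_included", "competitor_framing",
]


def build_audit_rows(prompt_pack, engines):
    """Create one blank row for every (engine, prompt) combination."""
    rows = []
    for engine, (intent, prompts) in itertools.product(engines, prompt_pack.items()):
        for prompt in prompts:
            row = dict.fromkeys(FIELDS, "")
            row.update(engine=engine, intent=intent, prompt=prompt)
            rows.append(row)
    return rows


def write_audit_sheet(rows, path):
    """Write rows to a CSV that opens in any spreadsheet tool."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        writer.writeheader()
        writer.writerows(rows)


rows = build_audit_rows(PROMPT_PACK, ENGINES)
# Written to the temp directory here; point this at your team's shared folder instead.
out_path = Path(tempfile.gettempdir()) / "ai_visibility_baseline.csv"
write_audit_sheet(rows, out_path)
print(len(rows))  # 5 engines x 3 prompts = 15
```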

Selecting AI Visibility Tools to Scale Measurement

Once you’ve baselined, scale with purpose-built tools:

Nudge: Comprehensive coverage across diverse AI platforms, integrating discovery, evaluation, and conversion insights for impactful measurement.

Profound: Broad engine coverage (ChatGPT, Perplexity, Gemini, Copilot, and more) and enterprise-grade testing that mimics user behavior.

Semrush AI Visibility Toolkit: Tracks brand mentions in AI answers and benchmarks competitors.

Peec AI: Fast checks that show which engines mention you, how often, and in what context.

xFunnel.AI and lightweight dashboards: Quick monitoring for teams that need simple visibility snapshots.

What to look for:

Engine coverage across major LLMs and regions

Reporting depth on sentiment, citations, and answer positioning

Integrations with analytics and reporting stacks

Pricing flexibility and trials

For most brands, pairing an enterprise suite with a mid-market toolkit balances breadth with actionable insights.
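One way to keep a tool comparison consistent is a weighted scorecard over the criteria above. The Python sketch below is hypothetical throughout: the weights, the 1 to 5 rating scale, and the placeholder names Tool A and Tool B are illustrations, not assessments of any vendor listed here.

```python
# Hypothetical weights for the selection criteria; they need not sum to 1.
WEIGHTS = {
    "engine_coverage": 0.35,
    "reporting_depth": 0.30,
    "integrations": 0.20,
    "pricing_flexibility": 0.15,
}


def weighted_score(ratings, weights=WEIGHTS):
    """Weighted average of 1-5 ratings; raises if any criterion is unrated."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(ratings[c] * w for c, w in weights.items()) / sum(weights.values())


# Placeholder ratings you would fill in after running trials.
candidates = {
    "Tool A": {"engine_coverage": 5, "reporting_depth": 4, "integrations": 3, "pricing_flexibility": 2},
    "Tool B": {"engine_coverage": 3, "reporting_depth": 3, "integrations": 4, "pricing_flexibility": 5},
}

ranked = sorted(candidates, key=lambda name: weighted_score(candidates[name]), reverse=True)
for name in ranked:
    print(f"{name}: {weighted_score(candidates[name]):.2f}")
```

Raising on a missing rating prevents a tool from winning simply because one criterion was never scored.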

Instrumenting Analytics to Track AI Referral Traffic

Close the loop by attributing sessions and conversions to AI answers:

In GA4, create referral filters and channel group rules that capture LLM sources like chatgpt.com, chat.openai.com, perplexity.ai, gemini.google.com, and copilot.microsoft.com. Use regex to consolidate variants (e.g., `(chatgpt\.com|chat\.openai\.com|perplexity\.ai|gemini\.google\.com|copilot\.microsoft\.com)`).

Track AI-attributed sessions, bounce rates, assisted conversions, and product revenue.

Tag important landing pages so you can segment performance by citation type (how-to vs. comparison vs. product).
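GA4 applies the channel rule for you, but the same matching logic is useful when you audit raw exports or build a custom dashboard. This Python sketch classifies referrer URLs against LLM hostnames and totals sessions, conversions, and revenue per source. The session record shape is a hypothetical export format; chatgpt.com is included alongside chat.openai.com because ChatGPT now serves from that domain.

```python
import re
from collections import defaultdict
from urllib.parse import urlparse

# Matches the AI hostnames exactly or as a subdomain (e.g. www.perplexity.ai),
# but not look-alikes such as notperplexity.ai. Extend as new assistants appear.
AI_HOSTS = re.compile(
    r"(?:^|\.)(chatgpt\.com|chat\.openai\.com|perplexity\.ai"
    r"|gemini\.google\.com|copilot\.microsoft\.com)$"
)


def ai_source(referrer_url):
    """Return the matched AI hostname for a referrer URL, or None for other traffic."""
    host = (urlparse(referrer_url).hostname or "").lower()
    match = AI_HOSTS.search(host)
    return match.group(1) if match else None


def summarize_ai_sessions(sessions):
    """Total sessions, conversions, and revenue per AI source; non-AI sessions are skipped."""
    totals = defaultdict(lambda: {"sessions": 0, "conversions": 0, "revenue": 0.0})
    for s in sessions:
        source = ai_source(s["referrer"])
        if source is None:
            continue
        t = totals[source]
        t["sessions"] += 1
        t["conversions"] += int(s["converted"])
        t["revenue"] += s["revenue"]
    return dict(totals)


# Hypothetical session export rows.
sessions = [
    {"referrer": "https://chatgpt.com/", "converted": True, "revenue": 120.0},
    {"referrer": "https://www.perplexity.ai/search?q=trail+shoes", "converted": False, "revenue": 0.0},
    {"referrer": "https://www.google.com/", "converted": True, "revenue": 80.0},
    {"referrer": "https://chatgpt.com/c/abc", "converted": False, "revenue": 0.0},
]
print(summarize_ai_sessions(sessions))
```

Anchoring the pattern to the end of the hostname is the main design choice: it keeps subdomains in and look-alike domains out.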

Linking visibility metrics to on-site outcomes shows which AI answers actually drive revenue. Nudge extends this further by unifying discovery (mention and citation data), evaluation (content and product interactions), and conversion (cart and checkout) so you can prioritize the answer formats and sources that sell.

Analyzing Visibility Trends and Citation Accuracy

Make visibility measurable over time:

Chart weekly mention rate, answer positioning, and sentiment across engines. Watch for sudden drops or rank swaps that indicate model updates or competitor moves.

Audit citation accuracy: flag hallucinations, outdated prices, or misattributed features. These often point to weak structured data, stale content, or entity conflicts.

Visualize answer-position trends by engine and topic cluster to see where structured improvements are paying off.
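Both the trend and accuracy checks are easy to automate once answers are logged. The Python sketch below flags week-over-week drops in mention rate beyond a relative threshold, and compares prices stated in AI answers against your catalog. The 20% threshold, the sample series, the SKUs, and the catalog are hypothetical; in practice you would feed in your own weekly KPIs and product feed.

```python
from datetime import date

# Hypothetical weekly mention rates for one engine and topic cluster.
weekly_mention_rate = [
    (date(2026, 1, 5), 0.42),
    (date(2026, 1, 12), 0.44),
    (date(2026, 1, 19), 0.31),
    (date(2026, 1, 26), 0.33),
]


def flag_drops(series, threshold=0.20):
    """Return (week, previous, current) where the rate fell more than `threshold` relative to the prior week."""
    flagged = []
    for (_, prev), (week, curr) in zip(series, series[1:]):
        if prev > 0 and (prev - curr) / prev > threshold:
            flagged.append((week, prev, curr))
    return flagged


# Hypothetical catalog (SKU -> current price) and prices quoted in AI answers.
catalog = {"TR2-BLK-10": 129.00, "TR3-GRY-09": 149.00}
quoted = [("TR2-BLK-10", 149.00), ("TR3-GRY-09", 149.00), ("TR9-RED-11", 99.00)]


def audit_price_claims(claims, catalog, tolerance=0.01):
    """Flag quoted prices that are stale, wrong, or attached to SKUs you don't sell."""
    issues = []
    for sku, stated in claims:
        actual = catalog.get(sku)
        if actual is None:
            issues.append((sku, stated, None, "unknown SKU: possible hallucination"))
        elif abs(stated - actual) > tolerance:
            issues.append((sku, stated, actual, "outdated or incorrect price"))
    return issues


print(flag_drops(weekly_mention_rate))
print(audit_price_claims(quoted, catalog))
```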

Diagnosing Content Gaps and Prioritizing Optimizations

Tools surface symptoms; your job is diagnosis:

Compare your semantic footprint to top competitors across target prompts. Where do they earn citations you miss? Which entities (brands, attributes, use cases) are they owning?

Identify structural issues: unclear product specs, missing FAQs, thin category pages, or inconsistent naming that confuses entity resolution.

Prioritize fixes by commercial impact: high-intent prompts, bestselling categories, and pages already receiving AI referrals should lead your roadmap.
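To make the ordering explicit, the sketch below scores each candidate fix by prompt intent, category revenue, and whether the page already earns AI referrals, the three factors named above. The intent weights, the 1.5x referral boost, and the backlog entries are hypothetical assumptions to tune, not a recommended formula.

```python
from dataclasses import dataclass

# Hypothetical weights: higher-intent prompts are worth more per unit of revenue.
INTENT_WEIGHT = {"informational": 1.0, "evaluative": 2.0, "transactional": 3.0}


@dataclass
class Fix:
    page: str
    issue: str
    intent: str              # intent of the prompts the page should win
    category_revenue: float  # trailing revenue of the page's category
    ai_referrals: int        # sessions already arriving from AI answers

    def priority(self) -> float:
        # Pages already receiving AI referrals are proven retrieval targets, so boost them.
        referral_boost = 1.5 if self.ai_referrals > 0 else 1.0
        return INTENT_WEIGHT[self.intent] * self.category_revenue * referral_boost


# Hypothetical backlog.
backlog = [
    Fix("/category/trail-shoes", "thin category copy", "evaluative", 50_000, 40),
    Fix("/blog/shoe-care", "missing FAQ", "informational", 5_000, 0),
    Fix("/products/trail-runner-2", "unclear specs", "transactional", 30_000, 0),
]

roadmap = sorted(backlog, key=lambda f: f.priority(), reverse=True)
for fix in roadmap:
    print(f"{fix.priority():>10,.0f}  {fix.page}  ({fix.issue})")
```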

Implementing Content and Technical Strategies for AI Visibility

Deploy tactics that make your content easy for AI systems to retrieve and reuse:

Apply schema.org markup (FAQPage, Product, Review) to clarify page roles and attributes.

Use answer-first formatting: concise definitions, bullets, short paragraphs, and verifiable facts.

Add multimodal assets—short clips, comparison tables, and annotated images—to increase inclusion in rich answers.

Strengthen authority signals with reviews, expert quotes, PR mentions, and high-quality backlinks.
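Structured data is usually emitted as JSON-LD in the page. The Python sketch below builds schema.org Product and FAQPage objects and wraps them in a script tag. The property names (`offers`, `aggregateRating`, `mainEntity`, `acceptedAnswer`) are standard schema.org vocabulary, while the helper function names and the product details are hypothetical. Validate the output with a structured-data validator before shipping.

```python
import json


def product_jsonld(name, sku, brand, price, currency, in_stock, rating=None, review_count=None):
    """Build a schema.org Product object with an Offer and optional AggregateRating."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "brand": {"@type": "Brand", "name": brand},
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": "https://schema.org/InStock" if in_stock else "https://schema.org/OutOfStock",
        },
    }
    if rating is not None and review_count:
        data["aggregateRating"] = {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        }
    return data


def faq_jsonld(pairs):
    """Build a schema.org FAQPage from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {"@type": "Question", "name": q, "acceptedAnswer": {"@type": "Answer", "text": a}}
            for q, a in pairs
        ],
    }


def to_script_tag(data):
    """Serialize to a JSON-LD script tag; escaping '<' keeps '</script>' in text from closing the tag early."""
    payload = json.dumps(data, indent=2, ensure_ascii=False).replace("<", "\\u003c")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical product and FAQ content.
product = product_jsonld("Trail Runner 2", "TR2-BLK-10", "ExampleBrand", 129, "USD", True, 4.6, 212)
faq = faq_jsonld([("Is the Trail Runner 2 waterproof?", "Yes, it uses a waterproof membrane.")])
print(to_script_tag(product))
print(to_script_tag(faq))
```

Generating markup from the same product data that renders the page helps keep prices and availability in the structured data consistent with what shoppers see.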

Iterating with A/B Testing and Measuring Impact

Treat AI visibility like a product:

Hypothesize improvements (e.g., new FAQ blocks, refined product specs, updated schema).

Implement structured changes on targeted URLs.

Measure pre/post differences in mention rate, citation positioning, and sentiment across engines.

Roll forward what lifts results; revert or revise what doesn’t.

Report downstream impacts (LLM-attributed sessions, assisted conversions, and revenue) so optimizations tie back to commercial ROI, not just visibility.
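For the pre/post comparison, a two-proportion z-test gives a rough sense of whether a change in mention rate is larger than run-to-run noise. The Python sketch below assumes you re-ran the same prompt pack on one engine before and after the change; the counts are hypothetical. AI answers vary between runs and repeated prompts are not fully independent, so treat the p-value as directional rather than conclusive.

```python
import math


def mention_rate_change(pre_hits, pre_total, post_hits, post_total):
    """Two-proportion z-test on mention rate before vs. after a change."""
    pre_rate = pre_hits / pre_total
    post_rate = post_hits / post_total
    pooled = (pre_hits + post_hits) / (pre_total + post_total)
    se = math.sqrt(pooled * (1 - pooled) * (1 / pre_total + 1 / post_total))
    z = (post_rate - pre_rate) / se if se > 0 else 0.0
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return {"pre_rate": pre_rate, "post_rate": post_rate,
            "lift": post_rate - pre_rate, "z": z, "p_value": p_value}


# Hypothetical: brand named in 15 of 50 answers before new FAQ blocks, 27 of 50 after.
result = mention_rate_change(15, 50, 27, 50)
print(f"lift {result['lift']:+.2f}, z = {result['z']:.2f}, p = {result['p_value']:.3f}")
```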

Practical Tips for Maximizing AI Search Rankings

Do use schema, concise answer-first content, and multimedia; don’t expect tools to diagnose every underlying issue without human review.

Capture “People Also Ask” (PAA) and near-PAA phrasing; these are frequent inputs for AI-generated summaries.

Right-size your stack: solo teams can start with free or low-cost tools and manual tracking; larger retailers should budget for enterprise coverage across engines and regions.

Industry analyses report that proactive, evidence-driven optimization can deliver triple-digit year-over-year gains in brand citations.

Frequently Asked Questions

What metrics should I track to measure AI search visibility?

Track mention rate, citation frequency and position, sentiment, citation quality, AI-attributed traffic, and competitive share of voice to quantify influence and growth.

How do AI visibility tools differ from traditional SEO tools?

AI tools monitor brand mentions and links inside AI-generated answers, while traditional SEO tools focus on keyword rankings and classic SERP performance.

Which AI platforms are essential to monitor for search visibility?

Monitor Google AI Overviews, ChatGPT, Gemini, Perplexity, Copilot, Claude, and Meta AI, plus any vertical-specific engines relevant to your category.

What strategies effectively boost AI search rankings?

Use structured data, answer-first formatting, PAA-style questions, multimodal assets, and strong authority signals like reviews and quality backlinks.

Are AI visibility metrics fully reliable, and how should I interpret them?

Treat metrics as directional; cross-validate across tools and focus on citation accuracy, positioning, and traffic/conversion impact rather than absolute scores.

Looking for a tactical checklist and dashboards to get started fast? Explore Nudge’s playbook on AI search visibility tactics and our guide to AI search visibility tools and tracking.